Use Local LLMs with Oracle AI Vector Search

There are a few reasons why we might want to perform "generative AI" tasks, such as generating embeddings, summaries, and text, using local resources rather than relying on external, third-party providers. Perhaps the most important are data privacy, cost, and the freedom to choose which large language models (LLMs) to use. About four months ago, I wrote that the Oracle Database 23ai 23.5.0.24.06 release on the Oracle Autonomous Database (ADB) made it possible to create the credentials needed to access these third-party LLMs through their REST APIs. Fast-forward to today: my ADB has been upgraded to version 23.6.0.24.10, which adds support for "local" third-party REST providers, specifically Ollama. In this article, I will demonstrate how you can utilise other Oracle Cloud Infrastructure (OCI) managed resources to get started quickly.

Before starting on the tasks in this blog post, check that your ADB instance is already running release 23.6.0.24.10.

```sql
select version_full from v$instance;
```

Deploying Ollama as a Container

Ollama is an open-source platform that allows users to run LLMs on Windows, macOS, and Linux. It supports a large number of LLMs, which are listed on this webpage. If necessary, developers can also deploy a custom model.

Ollama runs locally on a workstation or server. In addition to providing an interactive command-line interface to an LLM, it serves REST endpoints that let developers perform a variety of generative AI tasks. Documentation for these endpoints is available here.
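To give a feel for these endpoints, here is a minimal sketch of calling the generate endpoint directly with curl, wrapped in a hypothetical shell function, `ollama_generate`. It assumes the `ENDPOINT_IP_ADDRESS` and `ENDPOINT_PORT` variables that are set later in this post, a model that has already been pulled, and a prompt without double quotes or backslashes, since the JSON body is assembled by hand. Setting `"stream": false` asks Ollama for a single JSON object instead of a stream of partial responses.

```shell
#!/bin/sh
# Hypothetical helper: send a prompt to Ollama's /api/generate endpoint.
# Assumes ENDPOINT_IP_ADDRESS and ENDPOINT_PORT are set, and that the prompt
# contains no double quotes or backslashes (no JSON escaping is performed).
ollama_generate() {
  model="$1"
  prompt="$2"
  # "stream": false returns one JSON object rather than a series of chunks.
  curl -s "http://${ENDPOINT_IP_ADDRESS}:${ENDPOINT_PORT}/api/generate" \
    -d "{\"model\":\"${model}\",\"prompt\":\"${prompt}\",\"stream\":false}"
}
```

For example, `ollama_generate llama3.2:1b 'In one sentence, who founded Oracle?'` returns a JSON document whose `response` field holds the generated text.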

With the ADB running the required release, the next task is to run an instance of Ollama on OCI so that the database can use it as a third-party REST provider. Ollama can be installed by downloading a binary or, on Linux, by downloading and executing a shell-based installation script. Alternatively, it can be deployed as a Docker container.
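If you would like to try the container on a workstation before moving to OCI, Ollama's Docker instructions use a `docker run` command along the lines of the one below, wrapped here in a hypothetical `start_ollama_container` function. The named volume `ollama` (an arbitrary name) keeps pulled models across container restarts, port 11434 is Ollama's default REST port, and the container runs on CPU only.

```shell
#!/bin/sh
# Hypothetical helper: start Ollama locally in Docker (CPU only).
# The named volume "ollama" persists models stored under /root/.ollama,
# and 11434 is the default port for Ollama's REST endpoints.
start_ollama_container() {
  docker run -d \
    -v ollama:/root/.ollama \
    -p 11434:11434 \
    --name ollama \
    ollama/ollama:latest
}
```

The same image and tag are what the OCI Container Instance pulls from Docker Hub in the next section.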

OCI Container Instance

There are several options for running Docker containers on OCI:

- An OCI Compute instance with Podman or Docker installed.
- An OCI Container Instance.
- An OCI Kubernetes Engine (OKE) cluster.

For a no-fuss deployment, I opted for the second option, an OCI Container Instance.

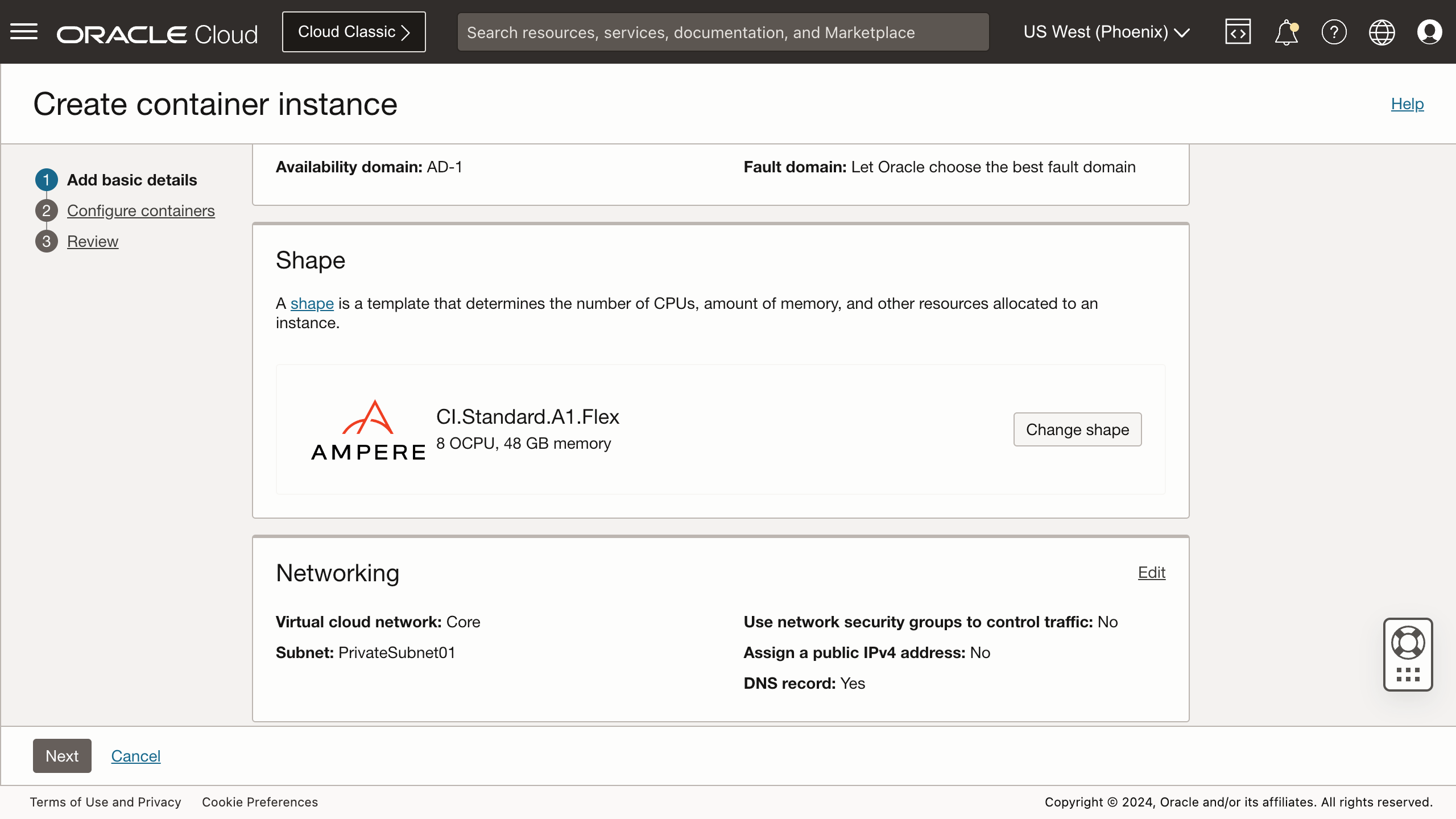

Ollama supports NVIDIA CUDA and AMD ROCm, but the OCI Container Instance service does not currently support GPU shapes, so if you need GPU acceleration, choose one of the other two options. I don't have access to GPUs, so that's a non-issue for me. And since, according to this article, Arm processors perform comparably well with smaller LLMs, I went with an Ampere shape equipped with 8 OCPUs and 48 GB of RAM, which would cost me upwards of CAD 73.51 per month. The instance comes with 15 GB of ephemeral storage for free. Be mindful that these instances do not come with custom-sized persistent storage, which limits the number and sizes of LLMs that can be installed.

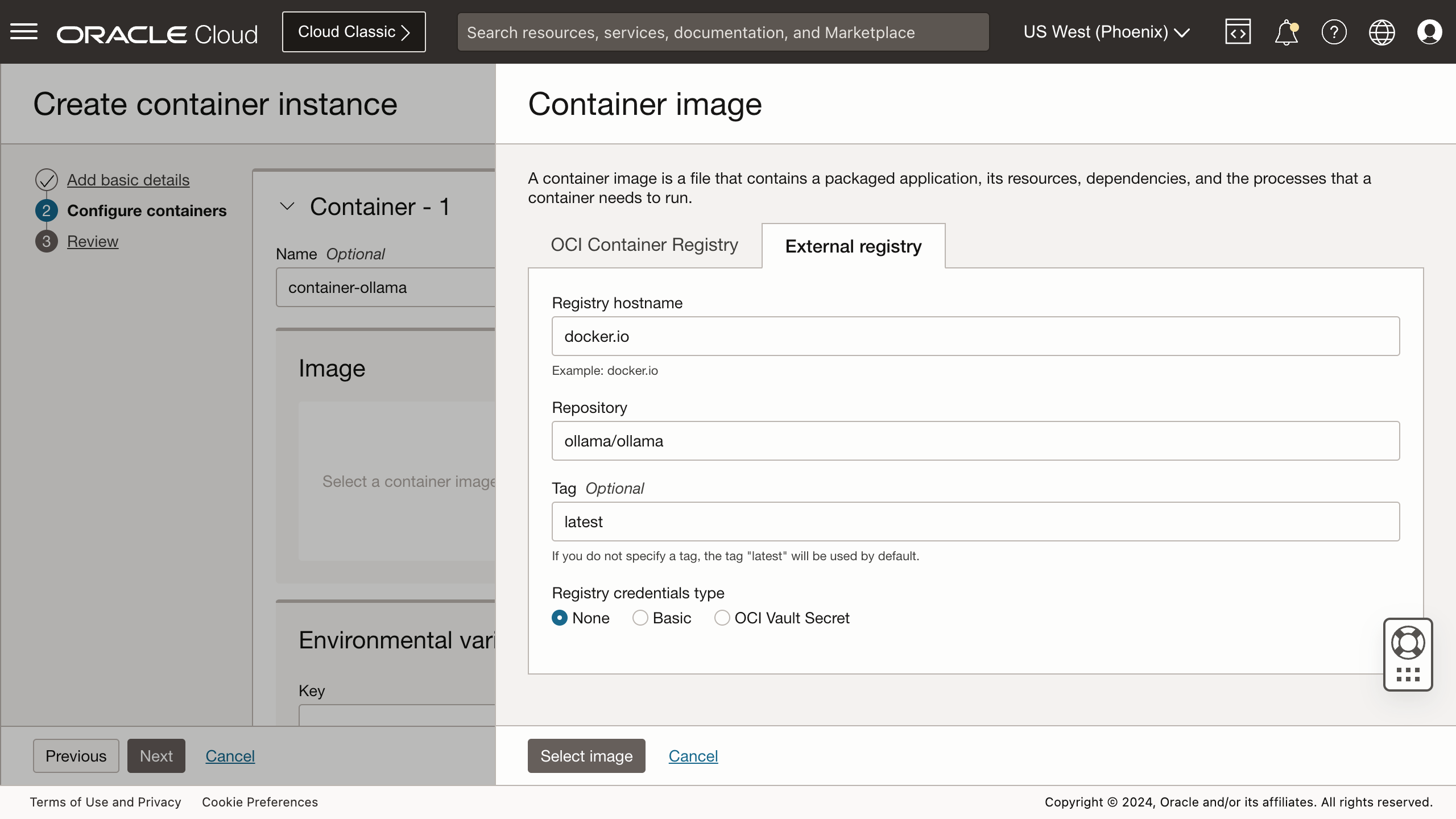

Deploying the container is simple. All I needed to do was to specify the external registry's hostname (https://docker.io), repository name (ollama/ollama), and tag (latest).

Once the container instance has been provisioned, and the Ollama container deployed, take note of the instance's IP address and set the environment variables ENDPOINT_IP_ADDRESS and ENDPOINT_PORT.

```shell
ENDPOINT_IP_ADDRESS=111.111.111.111
ENDPOINT_PORT=11434
```

By default, the port number for Ollama REST endpoints is 11434. Check that the server is running as expected.

```shell
curl -s "http://${ENDPOINT_IP_ADDRESS}:${ENDPOINT_PORT}/api/version"
```

It should return the version of Ollama in the Docker image that was used to create the container.

```json
{"version":"0.3.14"}
```
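A freshly provisioned container instance may take a little while before Ollama starts answering. The hypothetical `wait_for_ollama` function below is a small polling sketch that retries the version endpoint until it responds or a retry limit is reached; the default of 30 attempts two seconds apart is an arbitrary assumption.

```shell
#!/bin/sh
# Hypothetical helper: poll /api/version until Ollama answers.
# Usage: wait_for_ollama [max_attempts] [delay_seconds]
# Returns 0 as soon as the server responds successfully, 1 if it never does.
wait_for_ollama() {
  max_attempts="${1:-30}"
  delay="${2:-2}"
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # --fail makes curl exit non-zero on HTTP errors as well as connection errors.
    if curl -s --fail "http://${ENDPOINT_IP_ADDRESS}:${ENDPOINT_PORT}/api/version" >/dev/null; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 1
}
```

Running `wait_for_ollama && echo ready` right after provisioning avoids pulling models before the server is up.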

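A freshly provisioned Ollama container will not have any models available. To add a model, call the pull endpoint to download it. By default, the endpoint streams its download progress as a series of JSON objects until the model is ready.

```shell
curl "http://${ENDPOINT_IP_ADDRESS}:${ENDPOINT_PORT}/api/pull" \
  -d '{"name":"llama3.2"}'
```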

Assuming that you have jq installed, call the tags endpoint to list the available models.

```shell
curl -s "http://${ENDPOINT_IP_ADDRESS}:${ENDPOINT_PORT}/api/tags" \
  | jq -r '.models[] | [.model, .size, .details.parameter_size, .details.quantization_level] | join("|")'
```

It should return output similar to this:

```
llama3.2:1b|1321098329|1.2B|Q8_0
llama3.2:latest|2019393189|3.2B|Q4_K_M
```
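Since the end goal is AI Vector Search, it is also worth checking the embed endpoint directly before putting a gateway in front of it. The sketch below, a hypothetical `ollama_embed` function, posts text to `/api/embed`; the response carries the vectors in an `embeddings` array. The model is whatever you pass in, and the same no-escaping assumption as for hand-built JSON applies to the text.

```shell
#!/bin/sh
# Hypothetical helper: request an embedding from Ollama's /api/embed endpoint.
# Assumes ENDPOINT_IP_ADDRESS and ENDPOINT_PORT are set and that the text
# contains no double quotes or backslashes (no JSON escaping is performed).
ollama_embed() {
  model="$1"
  text="$2"
  curl -s "http://${ENDPOINT_IP_ADDRESS}:${ENDPOINT_PORT}/api/embed" \
    -d "{\"model\":\"${model}\",\"input\":\"${text}\"}"
}
```

Piping the result through `jq '.embeddings[0] | length'` shows the vector's dimension, which is useful to know before defining a `VECTOR` column.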

OCI API Gateway

The Oracle Autonomous Database is designed to be security-first. Hence, there are several rules that determine whether code may call and consume network resources such as REST endpoints. Here are some of them:

- The endpoint must be addressed by a resolvable hostname; IP addresses are not permitted.
- The endpoint must be secured with encryption, i.e., it must support SSL/TLS.
- The TLS certificate must be signed by an accepted certificate authority and be verifiable.
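A quick way to see why the Ollama container cannot be called from the database as-is is to check its URL against the first two rules. The hypothetical `check_adb_url` function below only inspects the form of a URL (an `https` scheme and a host that is not a bare IPv4 address); it does not verify the certificate chain, which is what curl does by default when called without `-k`.

```shell
#!/bin/sh
# Hypothetical pre-flight check mirroring two of the ADB rules above:
# the URL must use https, and its host must be a name, not an IPv4 address.
# It does NOT check DNS resolution or the TLS certificate.
check_adb_url() {
  url="$1"
  case "$url" in
    https://*) ;;
    *) echo "not https: $url" >&2; return 1 ;;
  esac
  # Strip the scheme, then everything from the first '/' and then ':' onwards.
  host="${url#https://}"
  host="${host%%/*}"
  host="${host%%:*}"
  if [ -z "$host" ]; then
    echo "no host in: $url" >&2
    return 1
  fi
  case "$host" in
    *[!0-9.]*) ;;  # contains a letter or other character: treat as a hostname
    *) echo "IP address not allowed: $host" >&2; return 1 ;;
  esac
  return 0
}
```

Running it against `http://$ENDPOINT_IP_ADDRESS:$ENDPOINT_PORT/api/generate` fails, which is the gap the API gateway fills.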

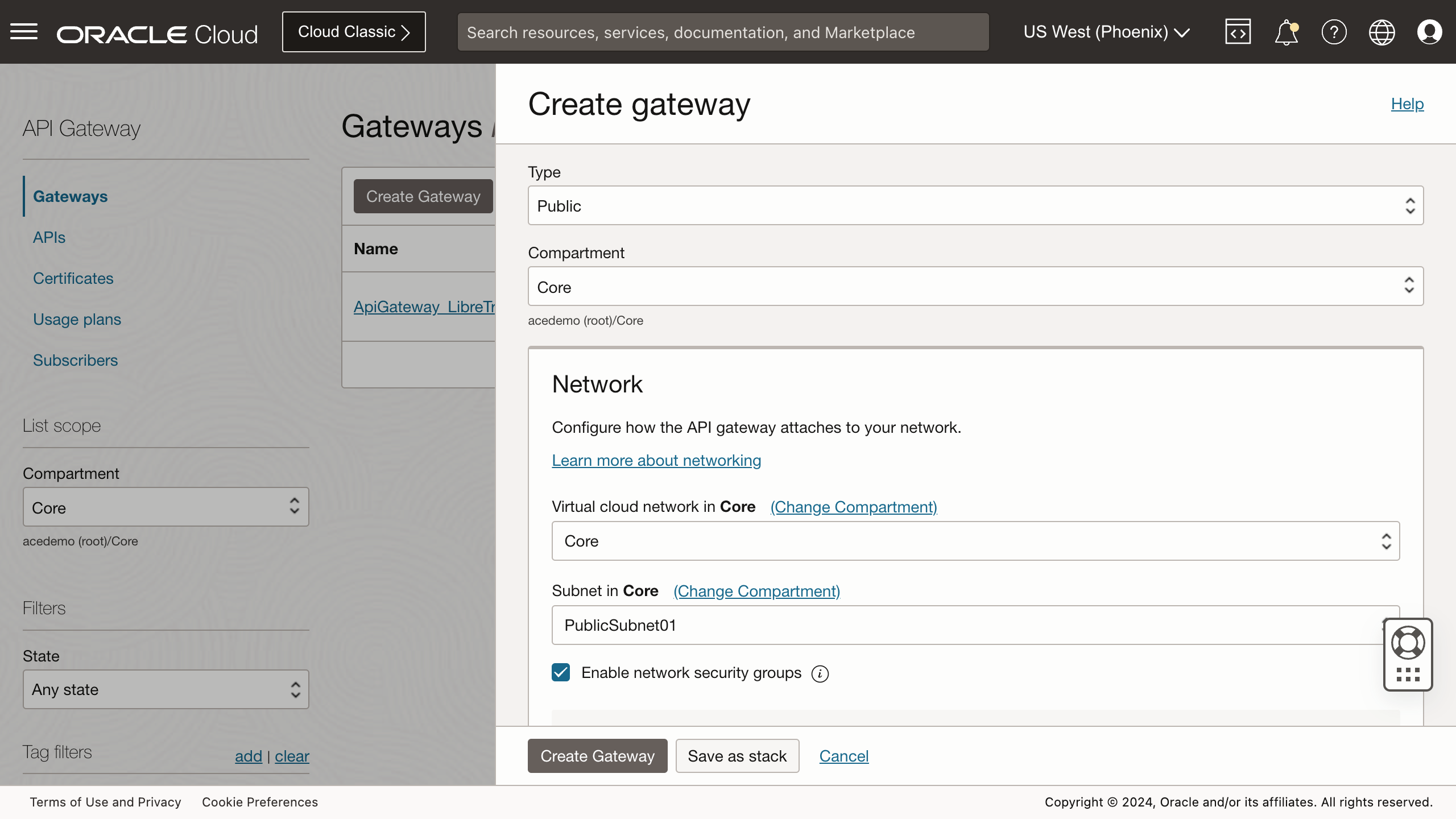

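The Ollama container, reachable only by IP address over plain HTTP, fails the first two rules. Placing an OCI API Gateway in front of it addresses all three: the gateway has a resolvable hostname and serves HTTPS with a certificate that the database can verify, while forwarding requests to Ollama over HTTP. An API gateway can be deployed with either a public or a private endpoint. Since my ADB instance is not on a private endpoint, I had to choose a public API gateway.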

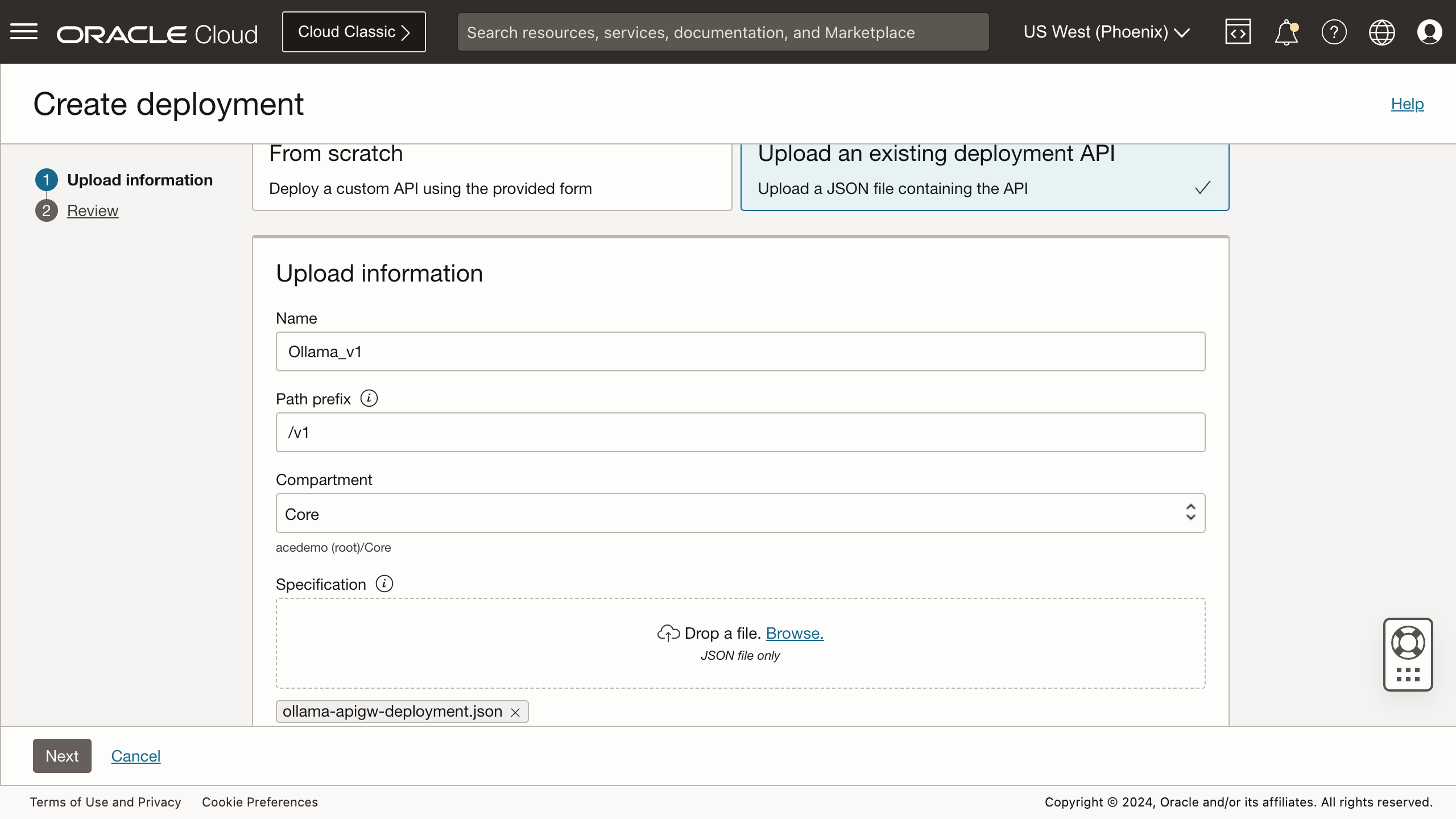

After the API gateway has been provisioned, create a deployment whose routes map request paths to the corresponding endpoints on the backend Ollama server. Deployments can be created either manually or from a JSON file containing the specification.

Fortunately, the Ollama API isn't too elaborate. For your convenience, here's a JSON specification that you can use as a starting template. Simply replace the placeholders {{ENDPOINT_IP_ADDRESS}} and {{ENDPOINT_PORT}}.

```json
{
  "requestPolicies": {},
  "routes": [
    {
      "path": "/api/tags",
      "methods": ["GET"],
      "backend": {
        "type": "HTTP_BACKEND",
        "url": "http://{{ENDPOINT_IP_ADDRESS}}:{{ENDPOINT_PORT}}/api/tags"
      }
    },
    {
      "path": "/api/generate",
      "methods": ["POST"],
      "backend": {
        "type": "HTTP_BACKEND",
        "url": "http://{{ENDPOINT_IP_ADDRESS}}:{{ENDPOINT_PORT}}/api/generate"
      }
    },
    {
      "path": "/api/chat",
      "methods": ["POST"],
      "backend": {
        "type": "HTTP_BACKEND",
        "url": "http://{{ENDPOINT_IP_ADDRESS}}:{{ENDPOINT_PORT}}/api/chat"
      }
    },
    {
      "path": "/api/embed",
      "methods": ["POST"],
      "backend": {
        "type": "HTTP_BACKEND",
        "url": "http://{{ENDPOINT_IP_ADDRESS}}:{{ENDPOINT_PORT}}/api/embed"
      }
    }
  ]
}
```

NOTE

Apart from the tags endpoint, I deliberately left out all endpoints that are not involved in generative AI tasks, for example, those for pulling and deleting models on the Ollama server.
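Filling in the placeholders by hand is easy to get wrong, so here is a small sketch using `sed`, wrapped in a hypothetical `render_deployment` function. It assumes the template is saved to a local file (the file names in the usage line are illustrative) and that the two variables from earlier are set; it refuses to run if either is empty.

```shell
#!/bin/sh
# Hypothetical helper: replace {{ENDPOINT_IP_ADDRESS}} and {{ENDPOINT_PORT}}
# in a deployment template and write the result to stdout.
# Usage: render_deployment template.json > deployment.json
render_deployment() {
  template="$1"
  if [ -z "${ENDPOINT_IP_ADDRESS}" ] || [ -z "${ENDPOINT_PORT}" ]; then
    echo "set ENDPOINT_IP_ADDRESS and ENDPOINT_PORT first" >&2
    return 1
  fi
  # In basic regular expressions, unescaped braces match literally.
  sed -e "s/{{ENDPOINT_IP_ADDRESS}}/${ENDPOINT_IP_ADDRESS}/g" \
      -e "s/{{ENDPOINT_PORT}}/${ENDPOINT_PORT}/g" \
      "$template"
}
```

After `render_deployment template.json > deployment.json`, running `jq . deployment.json > /dev/null` is a cheap way to confirm the file is valid JSON before using it to create the deployment.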

Congratulations! Once the deployment is complete, grab the API gateway's hostname and validate the setup by running a query that lists the available models on the Ollama server. In the URLs below, /v1 is the deployment's path prefix.

```sql
select
    model_name
  , round(model_size/1024/1024/1024, 2) model_size_gb
  , parameter_size
  , quantization_level
from json_table(
    apex_web_service.make_rest_request(
        p_http_method => 'GET'
      , p_url => 'https://{{UNIQUE_CODE}}.apigateway.{{REGION}}.oci.customer-oci.com/v1/api/tags'
    )
  , '$.models[*]' columns (
        model_name varchar2(20) path '$.model'
      , model_size number path '$.size'
      , parameter_size varchar2(20) path '$.details.parameter_size'
      , quantization_level varchar2(20) path '$.details.quantization_level'
    )
);
```

You should see results similar to these:

```
MODEL_NAME           MODEL_SIZE_GB PARAMETER_SIZE       QUANTIZATION_LEVEL
-------------------- ------------- -------------------- --------------------
llama3.2:1b                   1.23 1.2B                 Q8_0
llama3.2:latest               1.88 3.2B                 Q4_K_M
```
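If the query fails, it helps to test the gateway from a workstation first. curl verifies the server's TLS certificate by default, so a successful call without `-k` suggests the certificate rule is satisfied too. The hypothetical `gateway_url` and `gateway_tags` functions below assume that `UNIQUE_CODE` and `REGION` hold the two variable parts of the gateway hostname shown in the query, and that the deployment's path prefix is `/v1`.

```shell
#!/bin/sh
# Hypothetical helper: build an API gateway URL for a given Ollama API path.
# Assumes UNIQUE_CODE and REGION are set and the path prefix is /v1.
gateway_url() {
  printf 'https://%s.apigateway.%s.oci.customer-oci.com/v1%s\n' \
    "$UNIQUE_CODE" "$REGION" "$1"
}

# Hypothetical helper: list models through the gateway. curl verifies the
# TLS certificate by default; --fail turns HTTP errors into a non-zero exit.
gateway_tags() {
  curl -sS --fail "$(gateway_url /api/tags)"
}
```

A non-zero exit from `gateway_tags` with a certificate or DNS error points to the gateway, while an HTTP error points to the deployment routes or the Ollama backend.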

Finally, test this cool new generative AI feature using a similar SQL query.

```sql
select
  dbms_vector.utl_to_generate_text(
      data => 'In one sentence, who founded Oracle?'
    , params => json(
        json_object(
            key 'provider' value 'ollama'
          , key 'host' value 'local'
          , key 'url' value 'https://{{UNIQUE_CODE}}.apigateway.{{REGION}}.oci.customer-oci.com/v1/api/generate'
          , key 'model' value 'llama3.2:1b'
          , key 'options' value json_object(
                key 'temperature' value 0.2
              , key 'num_predict' value 20
            )
        )
      )
  ) as response
;
```

If successful, you will get a (hopefully) meaningful response.

```
RESPONSE
--------------------------------------------------------------------------------
Oracle was founded by Larry Ellison, Bob Miner, and Ed Oates in 1977.
```

Closing and Caution

Supporting Ollama as an alternative LLM provider for generative AI tasks is a great addition to Oracle Database 23ai. It gives developers and architects access to a variety of LLMs to integrate into applications and solutions. However, keep in mind that Ollama is open-source, relatively new, and has no obvious options for commercial support. Whether it is production-ready is debatable. The cost-benefit ratio of self-hosting these LLMs also needs to be considered.

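Finally, my focus in this blog post was to test the feature. A lot still needs to be done to secure the Ollama REST endpoints; for instance, the deployment above defines no request policies, so the gateway does not authenticate callers. If you'd like to discuss this further, please do not hesitate to reach out.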

Thanks for reading!