Give Your Oracle APEX Apps Eyes, Brains, and a Voice

ICYMI, earlier this month, Oracle announced the general availability of Meta's Llama 3.2 90B and 11B models on the OCI Generative AI (GenAI) service. As always, Oracle APEX is a great web development platform to give this new multimodal large language model (LLM) a spin. Here's a quick writeup on what I learned over the last weekend.

First, here's a screen recording of a simple web application that lets the user upload a photo, and let the GenAI service generate a description of the contents of the submission. As a dessert, the description is then sent to the OCI Speech service's text to speech (TTS) endpoint to generate an audio description.

GenAI Vision LLM for Generating Descriptive Text

Keep in mind that this is a very simplistic application to showcase and demonstrate the two Oracle AI services. In this application, there is a page for uploading the photo. And on this page, a PL/SQL procedure is called when the page is submitted. It then uses the GenAI service endpoint to generate text that describes the submitted image.

declare

l_response clob;

l_request_body clob;

l_response_object json_object_t;

begin

select

json_object(

key 'compartmentId' value :G_COMPARTMENT_ID

, key 'servingMode' value json_object(

key 'servingType' value 'ON_DEMAND'

, key 'modelId' value :G_MULTIMODAL_LLM_NAME

)

, key 'chatRequest' value json_object(

key 'messages' value json_array(

json_object(

key 'role' value 'USER'

, key 'content' value json_array(

json_object(

key 'type' value 'TEXT'

, key 'text' value :G_PROMPT

)

, json_object(

key 'type' value 'IMAGE'

, key 'imageUrl' value json_object(

key 'detail' value 'AUTO'

, key 'url' value 'data:' || mime_type || ';,'

|| apex_web_service.blob2clobbase64(

p_blob => image_blob

, p_newlines => 'N'

, p_padding => 'N')

)

)

)

)

)

, key 'apiFormat' value 'GENERIC'

, key 'maxTokens' value 500

, key 'temperature' value 0.1

, key 'frequencyPenalty' value 0

, key 'presencePenalty' value 0

, key 'topP' value 0.75

, key 'topK' value -1

, key 'isStream' value false

, key 'isEcho' value false

) returning clob

)

into l_request_body

from photo

where photo_id = :P2_PHOTO_ID;

apex_web_service.set_request_headers(

p_name_01 => 'Content-type'

, p_value_01 => 'application/json'

);

l_response := apex_web_service.make_rest_request(

p_http_method => 'POST'

, p_url => :G_BASE_ENDPOINT_URL

|| '/20231130/actions/chat'

, p_credential_static_id => :G_OCI_CREDENTIAL

, p_body => l_request_body

);

if apex_web_service.g_status_code = 200 then

l_response_object := json_object_t.parse(l_response);

:P2_DESCRIPTION :=

treat(

treat(

l_response_object

.get_object('chatResponse')

.get_array('choices')

.get(0)

as json_object_t

).get_object('message')

.get_array('content')

.get(0)

as json_object_t

).get_string('text')

;

update photo

set description = :P2_DESCRIPTION

where photo_id = :P2_PHOTO_ID;

else

raise_application_error(-20001, l_response);

end if;

end;

The procedure uses the newer chat API that was announced earlier in June this year.

NOTE

At the time of writing, the generation and summarization models have been deprecated, and it appears all the models available for the Generate Text endpoints are no longer available in the Chicago region. For example, the demo in this post no longer works. Be sure to check and track when the GenAI models retire, especially if they are used in production environments.

The chat API allows developers to include one more messages, for example, one could include the conversational history between the agent and user. However, for my purpose, I will only need to send one message. The message contains a content attribute that is an array of objects that can be of either the TEXT or IMAGE types. The request here contains both types. The first contains the prompt G_PROMPT to "instruct" what the LLM should return. This is set as a substitution string with the value:

Describe what is in the image. Your response should be in one paragraph, and a maximum of 50 words.

The submitted photo is added to the message as a second content object. The image is stored as a BLOB in the database, so it has to be converted into a base64-encoded string, and then included in the JSON request payload using the data URI scheme.

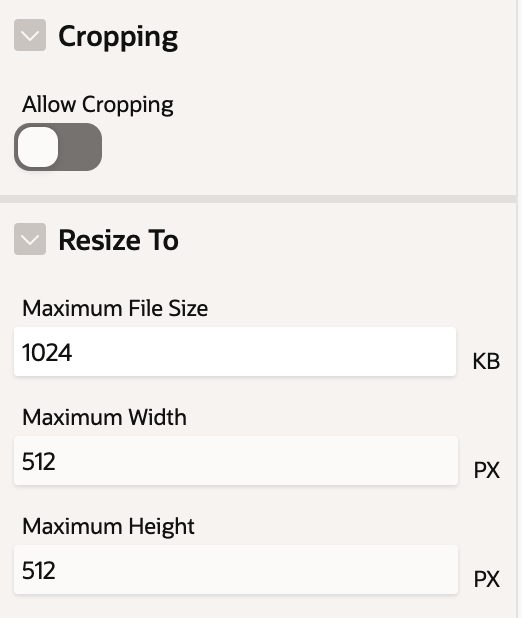

I had some initial problems uploaded images that were too large. The documentation states that the images should be 5 MB or If you face such an issue, I would suggest using the Oracle APEX Image Upload page item's built-in capability to reduce the image's resolution and/or file size before sending the request. It is also worthwhile noting that the maximum user prompt and response size is 128,000 tokens. A 512x512 image converts to approximately 1,610 tokens.

Based on the article and the release notes, the multimodal models are available in US Midwest (Chicago), UK South (London), Brazil East (Sao Paulo), and the European Union if you ag. However, it seems like I currently only have access to the 90B parameter model, so the G_MULTIMODAL_LLM_NAME substitution value is set to meta.llama-3.2-90b-vision-instruct.

Speech for Generating an Audio Description

The text-to-speech (TTS) feature in the OCI Speech service is relative new. Unlike its speech-to-text counterpart, the API is relatively more straightforward to use. The PL/SQL procedure below demonstrates all that is needed to create an audio file using the generated text.

declare

l_response blob;

l_request_body clob;

begin

apex_web_service.set_request_headers(

p_name_01 => 'Content-type'

, p_value_01 => 'application/json'

);

l_response := apex_web_service.make_rest_request_b(

p_http_method => 'POST'

, p_url => 'https://speech.aiservice.us-phoenix-1.oci.oraclecloud.com'

|| '/20220101/actions/synthesizeSpeech'

, p_credential_static_id => :G_OCI_CREDENTIAL

, p_body => json_object(

key 'compartmentId' value :G_COMPARTMENT_ID

, key 'isStreamEnabled' value false

, key 'text' value apex_escape.json(:P2_DESCRIPTION)

, key 'configuration' value json_object(

key 'modelFamily' value 'ORACLE'

, key 'modelDetails' value json_object(

key 'modelName' value 'TTS_2_NATURAL'

, key 'voiceId' value 'Annabelle'

)

, key 'speechSettings' value json_object(

key 'textType' value 'TEXT'

, key 'sampleRateInHz' value 24000

, key 'outputFormat' value 'OGG'

)

)

)

);

if apex_web_service.g_status_code = 200 then

update photo

set audio_description_blob = l_response

, audio_mime_type = 'audio/ogg'

where photo_id = :P2_PHOTO_ID;

else

raise_application_error(-20002, 'Error generating audio.');

end if;

end;

The SynthesizeSpeech endpoint is a POST endpoint that takes a JSON request payload, and returns the generated audio as a binary object. The JSON contains an attribute configuration that details the format, quality, and characteristics of the audio file produced.

NOTE

The TTS feature supports SSML or Speech Synthesis Markup Language. I envisage that we could generate a more expressive speech with the appropriate prompt.

Costs of Services

From the OCI price list, the Meta Llama 3.2 90B Vision model currently costs CAD 0.006553 per 10K transactions (characters). However, I haven't quite figured out how to convert a binary image file to calculate the number of characters. Could it be the length of the base64-encoded string? If you figure that out, please share with me. Meanwhile, I would reduce the resolution of the image that is sent to the service. I have found the model to perform almost just as well with smaller resolution pictures.

As for the TTS service, I haven't seen a price tag. 😉

Closing

I am excited about the potential for creating applications that can help the visually impaired navigate and understand the world around them with the combination of both technologies. And I hope it is evident that with Oracle APEX, creating these types of applications are not hard, so I hope this short writeup inspires you to do some good. We definitely need a lot of that right now. If there's a social good project that I could help or contribute to, please do not hesitate to reach out!

Credits