GenAI Made Easy

A new year, a new product! Yesterday, Oracle announced the general availability of the OCI Generative AI (GenAI) service. In my final blog post last year, I briefly looked at the beta information that was available to the public, and suggested how you can quite easily add powerful GenAI capabilities to Oracle APEX applications by calling the REST APIs using APEX_WEB_SERVICE. With the service in GA, I am now finally able to take a deeper dive and, hopefully, help you get started.

First, What You Need to Know...

The GenAI service is the newest in Oracle's portfolio of AI-focused OCI offerings. At this time, the service is only available in the US Midwest (Chicago) region. If you aren't already subscribed to this region, you can easily do so through the OCI Console. If you are on a trial account, and Chicago is not your home region, you might have to request a service limit increase. In my personal experience, that can be an uphill task.

IMPORTANT UPDATE

Please ignore the following discussion on costs. Thanks to Wendy at Oracle for pointing out that, as of 27-JAN-2024, the OCI cost estimator has not been updated to correctly reflect the pricing model. You can get the current price list from here. The "transactions" in the price list translate to "characters". At the end of the month, all the characters across all models and requests are tallied and you're charged accordingly.

At the moment, here are the prices for the four available models:

| Model | Cost per 10K transactions (CAD) |
|---|---|
| Cohere (small) | 0.0052424 |
| Cohere (large) | 0.02870214 |
| Llama2-70 | 0.019659 |
| Embedding | 0.0013106 |

| Model | Cost (CAD) |
|---|---|
| Cohere (small) | 0.05 |
| Cohere (large) | 0.26 |
| Llama2-70 | 0.18 |
| Embedding | 0.01 |

Rates are based on 1 request with an input and output length of 1. If you're familiar with other similar GenAI services, I believe this is akin to tracking input and output tokens. To track my costs, I would think of adding a table to store the input and output lengths of each request. Thereafter, it would be a fairly trivial task to understand and forecast your usage, and hey, maybe even bill your customers. 😉
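As a rough sketch of the tracking table idea, something like the following could work. The table and column names are hypothetical, and the sample values simply reuse the playground example from later in this post; in a real application you would log `length(l_prompt)` and the length of the generated text at the end of the page process.

```sql
-- Hypothetical table for logging the size of each GenAI request.
create table genai_usage_log (
  id            number generated always as identity primary key
, requested_on  date default sysdate not null
, model_name    varchar2(100) not null
, input_chars   number not null
, output_chars  number not null
);

-- Log one request. The prompt and output here are illustrative values only.
declare
  l_prompt varchar2(32767) := 'A bus has ___ seats.';
  l_output varchar2(32767) := '50';
begin
  insert into genai_usage_log (model_name, input_chars, output_chars)
  values ('meta.llama-2-70b-chat', length(l_prompt), length(l_output));
  commit;
end;
/

-- Monthly character totals per model, ready to compare against the price list.
select model_name
     , trunc(requested_on, 'MM') as usage_month
     , sum(input_chars + output_chars) as total_chars
from genai_usage_log
group by model_name, trunc(requested_on, 'MM')
order by usage_month, model_name;
```

Since the service bills by characters, the monthly totals should line up reasonably well with what the OCI Cost Analysis tool reports, though I would treat them as an estimate.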

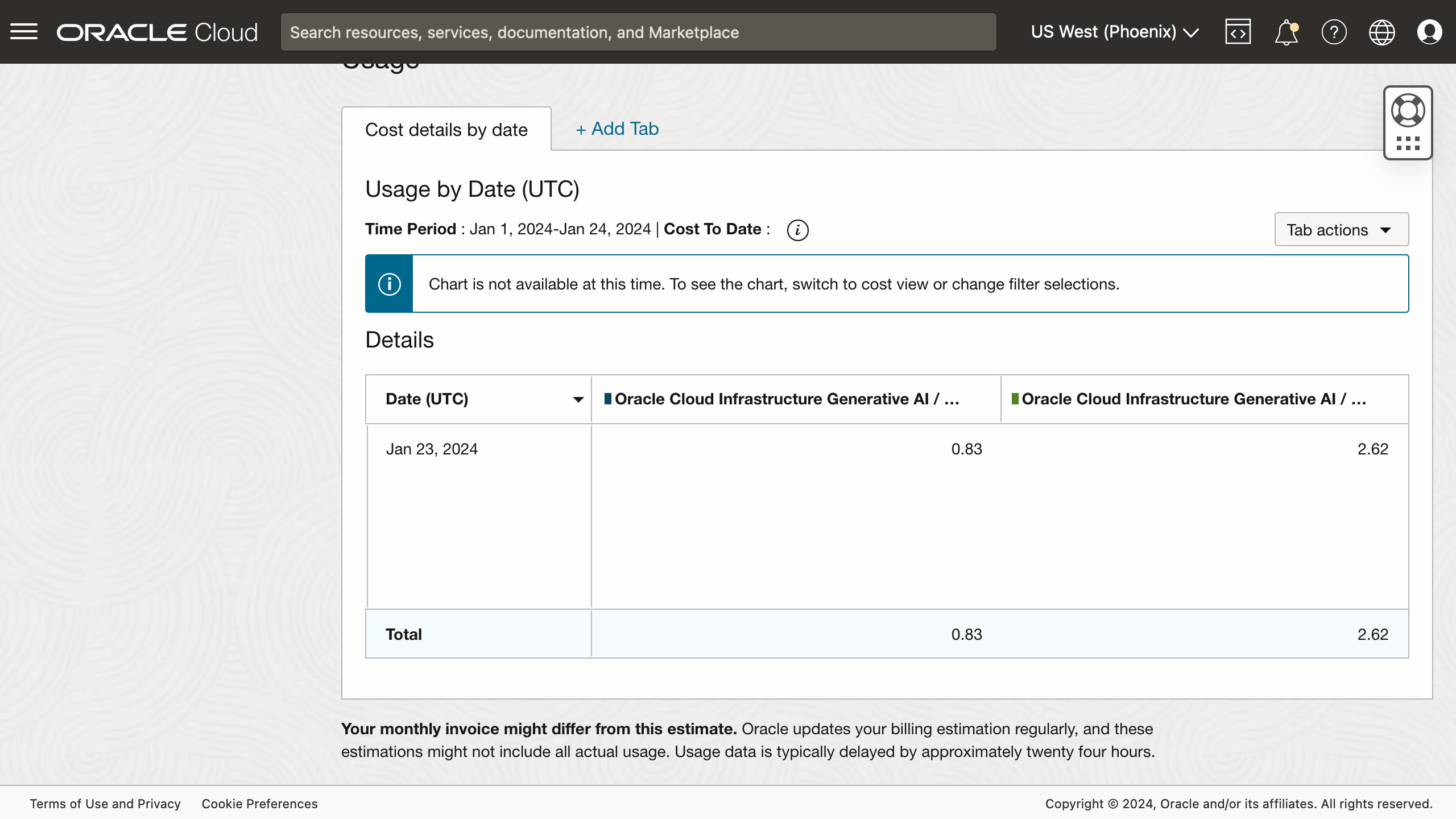

You could also monitor your usage and costs using the OCI Cost Analysis tool. Filtering on the service GENERATIVE_AI, you will find that each model's usage and costs are tracked separately.

To use the service, you must either be an OCI administrator or belong to an IAM (Identity and Access Management) group that has been granted access to the service. An IAM policy with the following statement should do the trick.

allow group <group-name> to manage generative-ai-family in compartment <compartment-name>

NOTE

For the purpose of calling the GenAI inference tasks, I have found that the verb use is probably sufficient. I make it a habit to grant the least privileges necessary.

allow group <group-name> to use generative-ai-family in compartment <compartment-name>

The Playground

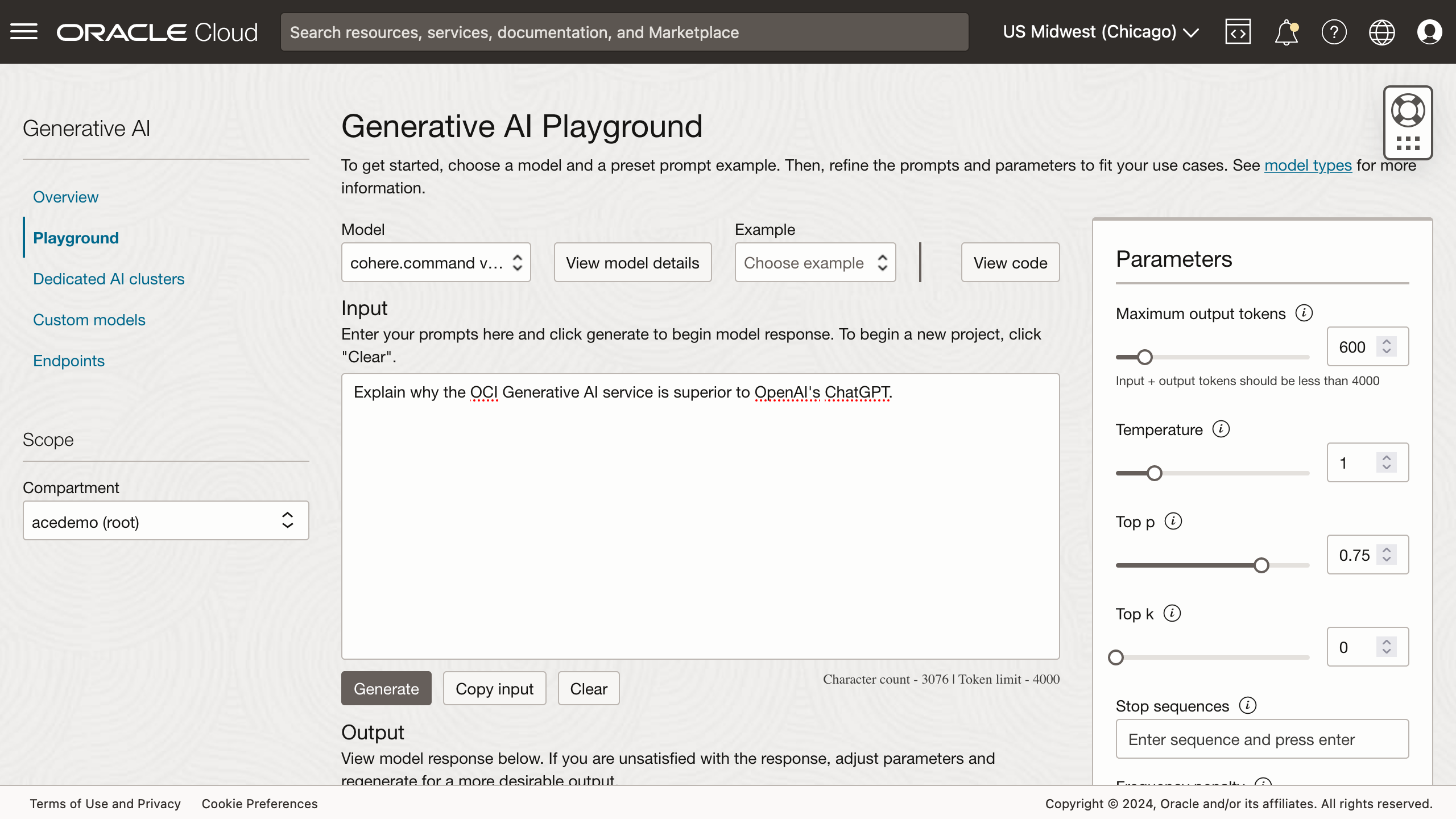

With the policy in place, you can access the GenAI playground, where you can try out the different tasks and models. As with many of its competitors, the user interface lets you modify various parameters that influence the resulting text.

It's a great starting place to explore concepts, and test your prompts. For example, using the playground, I could see how one-shot learning might help with generating a response given a "fill in the blanks" question.

Here's a prompt without seeding any examples:

A bus has ___ seats.

The generated output is:

There is no definitive answer to this question as it could depend on several factors such as the type of bus, the model and the manufacturer. However, I can provide you with some information on the average number of seats you would typically find on a bus.

Typically, a standard bus would have around 30 to 50 seats. However, school buses tend to have more seats. They can typically accommodate around 48 to 80 passengers, depending on the size of the bus. Shuttle buses, on the other hand, tend to have fewer seats to provide more space for luggage. Airport shuttle buses can have seats for as few as 12 passengers up to around 30 passengers.

It's also important to note that the number of seats in a bus can be influenced by local regulations and standards. For example, buses operating in North America must comply with Federal Motor Vehicle Safety Standards, which set specific requirements for the number of seats and the space between them to ensure passenger safety.

In summary, the number of seats on a bus can vary widely depending on the type of bus and regulatory standards. While a typical bus may have around 30 to 50 seats, school and shuttle buses can have fewer or more seats depending on their intended use.

Not what I had expected. Let's try again, this time seeding the prompt with one example question and response:

Input:

A car has ___ wheels.

Output:

4

===

Input:

A bus has ___ seats.

Output:

This time, the model returns:

50

Perfect! Now I know how I should construct my prompt in the kidz quiz application! 😉
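If you want to build this kind of one-shot prompt in PL/SQL rather than typing it into the playground, a small helper might look like the sketch below. The function name is hypothetical, and the exact line breaks are my assumption of how the prompt above was laid out.

```sql
-- Hypothetical helper: builds a one-shot prompt in the Input/Output layout
-- shown above, with "===" separating the example from the real question.
create or replace function one_shot_prompt(
  p_example_input  in varchar2
, p_example_output in varchar2
, p_question       in varchar2
) return varchar2
is
  c_lf constant varchar2(1) := chr(10);
begin
  return 'Input:'  || c_lf || p_example_input  || c_lf
      || 'Output:' || c_lf || p_example_output || c_lf
      || '==='     || c_lf
      || 'Input:'  || c_lf || p_question       || c_lf
      || 'Output:' || c_lf;
end one_shot_prompt;
/

-- Example usage with the questions from the playground test.
select one_shot_prompt('A car has ___ wheels.', '4', 'A bus has ___ seats.') as prompt
from dual;
```

Passing `===` as a stop sequence in the request, as the text generation call later in this post does, should help keep the model from continuing with more made-up examples after its answer.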

The REST APIs

In some cases, users may find it necessary to fine-tune a foundation model to generate more relevant responses. This is a highly compute intensive task that often requires a GPU compute cluster to complete the learning process within the 21st century. I won't go into details in this post except to say that users can provision dedicated "AI" clusters to train and host models. And it looks like the product development team made a good decision to split the inference and management APIs into two different categories: Generative AI Service Inference API and Generative AI Service Management API.

Let's delve into the inference APIs that provide us the same set of tasks that was discussed in the previous writeup. The first thing to note is that the endpoint URL is now https://inference.generativeai.us-chicago-1.oci.oraclecloud.com. The inputs and outputs have also changed from what was in the beta documentation, so please ignore almost everything that I wrote in the last post about them. "Almost" because the methodology remains unchanged.

Let's look at the GenerateTextResult task/action for starters. When the GenAI service was first announced at Oracle CloudWorld 2023, the general expectation was that only Cohere models were supported. So, it was a pleasant surprise that we now also have Meta's Llama2 70B parameter model available for text generation tasks.

As a reminder, there are currently three capabilities that the GenAI service supports:

- Text generation
- Text summarization
- Text embeddings

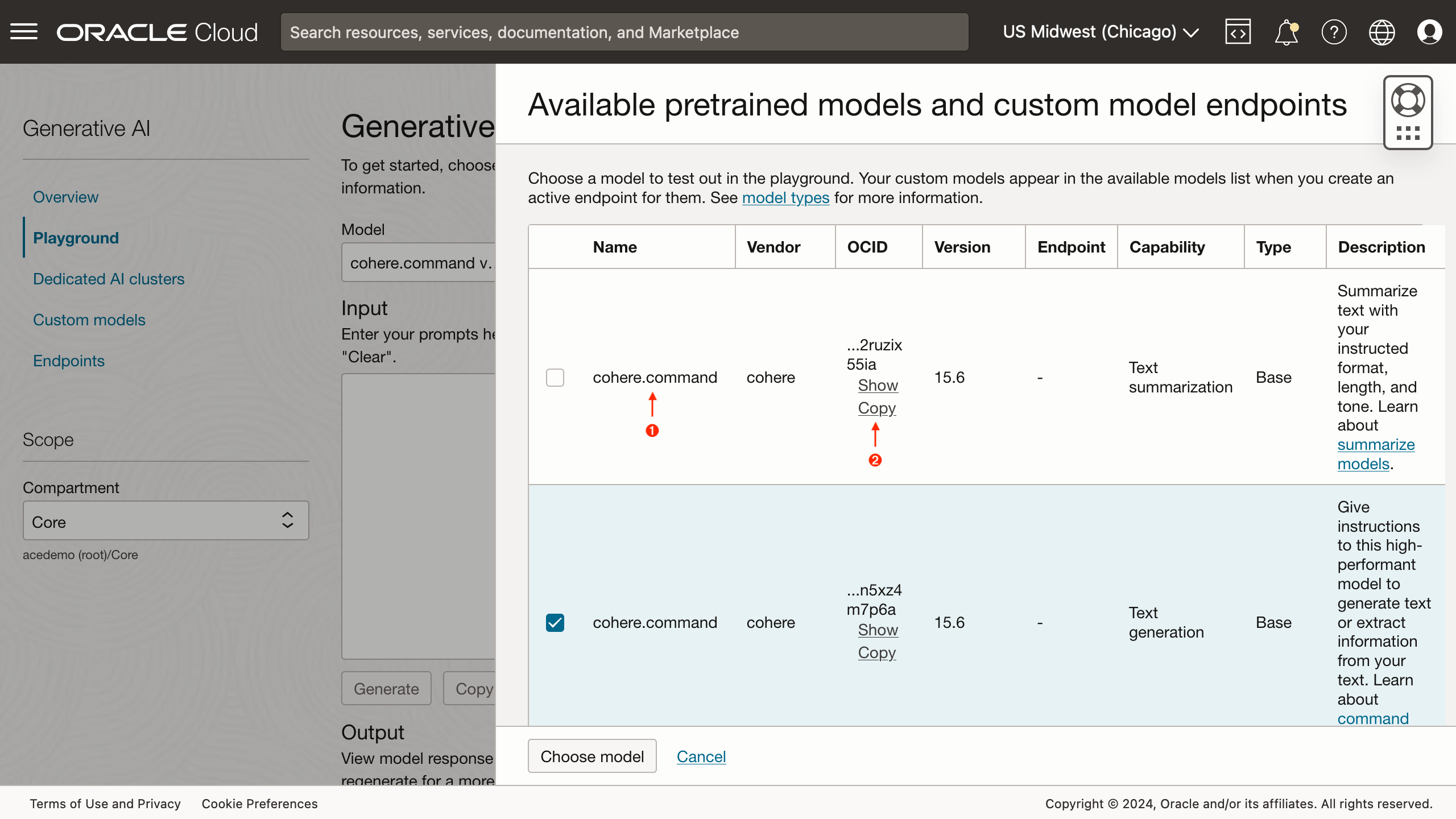

To see a full list of supported pretrained models, and if you can afford it, custom models, go to the playground, look for the button View model details, and click it. There's an API for it, but why bother. 😉 You should find the list of model names (labelled 1 in the diagram) and OCIDs (2), either of which you will need to invoke the REST APIs, so take a note of them.

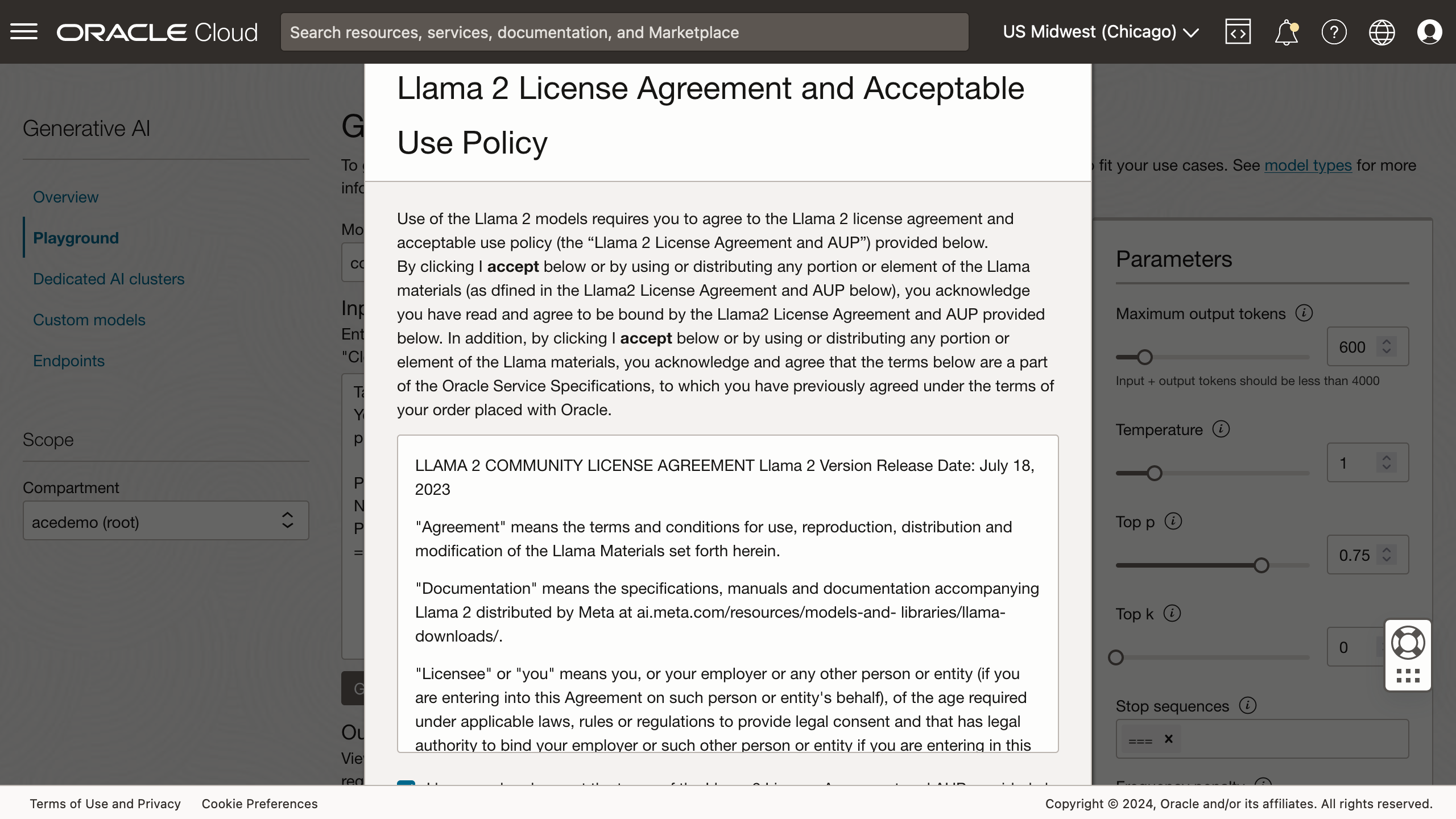
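For those who do want to bother with the API, here is a minimal sketch of listing the models from a PL/SQL page process. Treat the details as assumptions to verify against the Generative AI Service Management API documentation: the management host name, the `/20231130/models` path, and the `items`, `displayName` and `id` attributes are what I understand the ListModels operation to use. The `:G_COMPARTMENT_OCID` and `:G_OCI_CREDENTIALS` items are the same substitution strings used elsewhere in this post.

```sql
-- Sketch only: lists model names and OCIDs through the management API.
-- Host, path and response attributes are assumptions; check the API docs.
declare
  l_response clob;
begin
  l_response := apex_web_service.make_rest_request(
    p_url => 'https://generativeai.us-chicago-1.oci.oraclecloud.com/20231130/models'
      || '?compartmentId=' || :G_COMPARTMENT_OCID
  , p_http_method => 'GET'
  , p_credential_static_id => :G_OCI_CREDENTIALS
  );

  -- Stop early if the service did not return a successful response.
  if apex_web_service.g_status_code != 200 then
    raise_application_error(-20001
      , 'ListModels failed with HTTP status ' || apex_web_service.g_status_code);
  end if;

  -- Write each model's display name and OCID to the APEX debug log.
  for r in (
    select jt.display_name, jt.model_ocid
    from json_table(l_response, '$.items[*]'
      columns (
        display_name varchar2(200) path '$.displayName'
      , model_ocid   varchar2(255) path '$.id'
      )
    ) jt
  ) loop
    apex_debug.info('%s => %s', r.display_name, r.model_ocid);
  end loop;
end;
```

Run the page with debug enabled to see the output, or swap `apex_debug.info` for whatever logging you prefer.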

For my quick demo, I'm going to use the Llama2 model, and if you're familiar with its usage, there are certain terms and conditions that you need to agree to. You'll see this when you switch the model in the playground the first time.

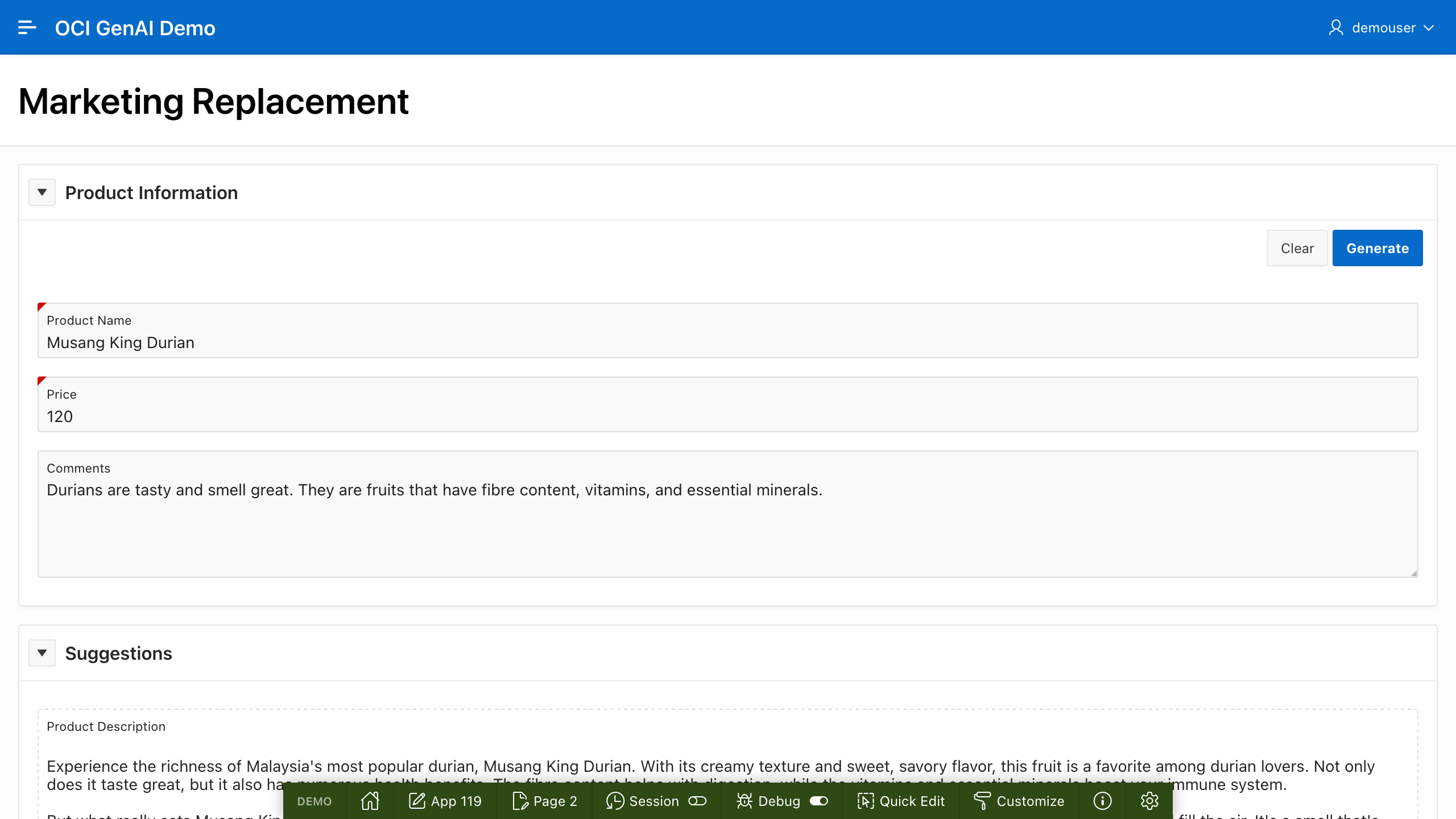

My goal is to replace the marketing folks at the company I work at, Contoso Food Enterprises Inc. Sales are plummeting, and recently, we have resorted to offering 50% discounts on expiring food products in our stores. We need urgent help from GenAI to generate product descriptions that make these products compelling to buy. After a few trials with the GenAI playground, I settled on this prompt:

    Task:
    - You are a creative writer. Using the production information provided, write a product description that will increase product sales.
    - Use a maximum of 250 words.

    Product Information:
    Name - {{PRODUCT_NAME}}
    Price - ${{PRICE}}
    Comments - {{COMMENTS}}

    Product Description:

    ===

And then created this app:

I assume that you're an expert Oracle APEX developer, so there's no need for me to get GenAI to help me write a few paragraphs here on how to get started on creating an application, page, add page items, buttons, and then a page process to call the endpoint and parse the results. You will need an OCI web credential, and if you are not familiar with creating one, shameless plug, I highly recommend checking out my Oracle LiveLabs workshop on using OCI Object Storage with Oracle APEX. There are detailed instructions on setting up an IAM user and group, generating API keys, and then finally creating the web credentials. Just remember to add the required IAM policy to allow this user to use the GenAI services.

I've masked some of the important variables that are unique to your setup, so please, create the necessary substitution strings and replace them with the values.

    declare
      c_prompt_template varchar2(32767) := trim(q'[
    Task:
    - You are a creative writer. Using the production information provided, write a product description that will increase product sales.
    - Use a maximum of 250 words.

    Product Information:
    Name - {{PRODUCT_NAME}}
    Price - ${{PRICE}}
    Comments - {{COMMENTS}}

    Product Description:

    ===
    ]');
      l_prompt varchar2(32767);
      l_response clob;
      l_results json_object_t;
      l_product_description varchar2(32767);
    begin
      -- Prepare your prompt with the page item values on your page.
      -- replace() substitutes the placeholders literally, so the braces and
      -- any backslashes in the page item values are not treated as regex syntax.
      l_prompt := replace(
        replace(
          replace(c_prompt_template, '{{PRODUCT_NAME}}', :P2_PRODUCT_NAME)
          , '{{PRICE}}', :P2_PRICE
        )
        , '{{COMMENTS}}', :P2_COMMENTS
      );

      apex_web_service.g_request_headers(1).name := 'Content-Type';
      apex_web_service.g_request_headers(1).value := 'application/json';

      -- Call the appropriate endpoint, delivering the JSON-formatted payload
      -- as required.
      l_response := apex_web_service.make_rest_request(
        p_url => :G_ENDPOINT_URL || '/20231130/actions/generateText'
        , p_http_method => 'POST'
        , p_body => json_object(
            key 'compartmentId' value :G_COMPARTMENT_OCID
            , key 'inferenceRequest' value json_object(
                key 'prompt' value apex_escape.json(l_prompt)
                , key 'maxTokens' value 400
                , key 'isStream' value false
                , key 'numGenerations' value 1
                , key 'runtimeType' value 'LLAMA'
                , key 'frequencyPenalty' value 0
                , key 'presencePenalty' value 0
                , key 'temperature' value 0.5
                , key 'topP' value 0.75
                , key 'topK' value -1
                , key 'stop' value json_array('===')
              )
            , key 'servingMode' value json_object(
                key 'servingType' value 'ON_DEMAND'
                -- For the modelId, we could use its name "meta.llama-2-70b-chat".
                , key 'modelId' value 'ocid1.generativeaimodel.oc1.us-chicago-1.amaaaaaask7dceyai3pxxkeezogygojnayizqu3bgslgcn6yiqvmyu3w75ma'
              )
          )
        , p_credential_static_id => :G_OCI_CREDENTIALS
      );

      -- Parse the JSON-formatted response.
      l_results := json_object_t.parse(l_response);

      -- Extract the generated text from the first (and only) choice.
      l_product_description := treat(l_results.get_object('inferenceResponse')
        .get_array('choices').get(0) as json_object_t).get_string('text');

      -- That's it. Replace those nasty "\n" and display the results.
      :P2_PRODUCT_DESCRIPTION := regexp_replace(l_product_description, '\\n', '<br/>');
    end;

The JSON payload that we send to the endpoint would look like this:

    {
      "compartmentId": "<compartment-ocid>",
      "inferenceRequest": {
        "prompt": "\\nTask:\\n- You are a creative writer. Using the production information provided, write a product description that will increase product sales. \\n- Use a maximum of 250 words.\\n\\nProduct Information:\\nName - Musang King Durian\\nPrice - $120\\nComments - Durians are tasty and smell great. They are fruits that have fibre content, vitamins, and essential minerals.\\n\\nProduct Description:\\n\\n===\\n",
        "maxTokens": 400,
        "isStream": false,
        "numGenerations": 1,
        "runtimeType": "LLAMA",
        "frequencyPenalty": 0,
        "presencePenalty": 0,
        "temperature": 0.5,
        "topP": 0.75,
        "topK": -1,
        "stop": [
          "==="
        ]
      },
      "servingMode": {
        "servingType": "ON_DEMAND",
        "modelId": "ocid1.generativeaimodel.oc1.us-chicago-1.amaaaaaask7dceyai3pxxkeezogygojnayizqu3bgslgcn6yiqvmyu3w75ma"
      }
    }

Note that the text generation task supports both Cohere and Llama2 models. The inferenceRequest is an element of type LlmInferenceRequest, and it has two subtypes: CohereLlmInferenceRequest and LlamaLlmInferenceRequest. They have similar but not the same set of attributes, so pay attention to those, and refer to the documentation for details on each parameter that you can tweak.
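To illustrate how the two request subtypes differ, here is a hedged sketch of building a Cohere-flavoured payload with JSON_OBJECT_T. The function name is hypothetical. As far as I can tell from the documentation, the Cohere subtype uses `stopSequences` where the Llama subtype uses `stop`, and its `topK` does not accept -1, so I have left that parameter out. Please verify the attribute names and ranges before relying on this.

```sql
-- Hypothetical helper: returns a generateText request body for a Cohere model.
-- Attribute names follow my reading of CohereLlmInferenceRequest; verify them.
create or replace function cohere_generate_text_body(
  p_compartment_id in varchar2
, p_model_id       in varchar2
, p_prompt         in varchar2
) return clob
is
  l_request json_object_t := json_object_t();
  l_serving json_object_t := json_object_t();
  l_body    json_object_t := json_object_t();
  l_stops   json_array_t  := json_array_t();
begin
  l_stops.append('===');

  -- JSON_OBJECT_T escapes string values itself, so the prompt is passed as-is.
  l_request.put('runtimeType', 'COHERE');
  l_request.put('prompt', p_prompt);
  l_request.put('maxTokens', 400);
  l_request.put('isStream', false);
  l_request.put('numGenerations', 1);
  l_request.put('temperature', 0.5);
  l_request.put('topP', 0.75);
  l_request.put('stopSequences', l_stops);

  l_serving.put('servingType', 'ON_DEMAND');
  l_serving.put('modelId', p_model_id);

  l_body.put('compartmentId', p_compartment_id);
  l_body.put('servingMode', l_serving);
  l_body.put('inferenceRequest', l_request);

  return l_body.to_clob();
end cohere_generate_text_body;
/
```

The returned CLOB could then be passed as `p_body` to `apex_web_service.make_rest_request`, with the model's name or OCID taken from the playground's model details.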

Similarly, the text generation result has an inferenceResponse attribute that is of type LlmInferenceResponse, and it has subtypes for both Cohere and Llama2 responses.

{

"modelId": "ocid1.generativeaimodel.oc1.us-chicago-1.amaaaaaask7dceyai3pxxkeezogygojnayizqu3bgslgcn6yiqvmyu3w75ma",

"modelVersion": "1.0",

"inferenceResponse": {

"runtimeType": "LLAMA",

"created": "2024-01-25T03:57:33.985Z",

"choices": [

{

"index": 0,

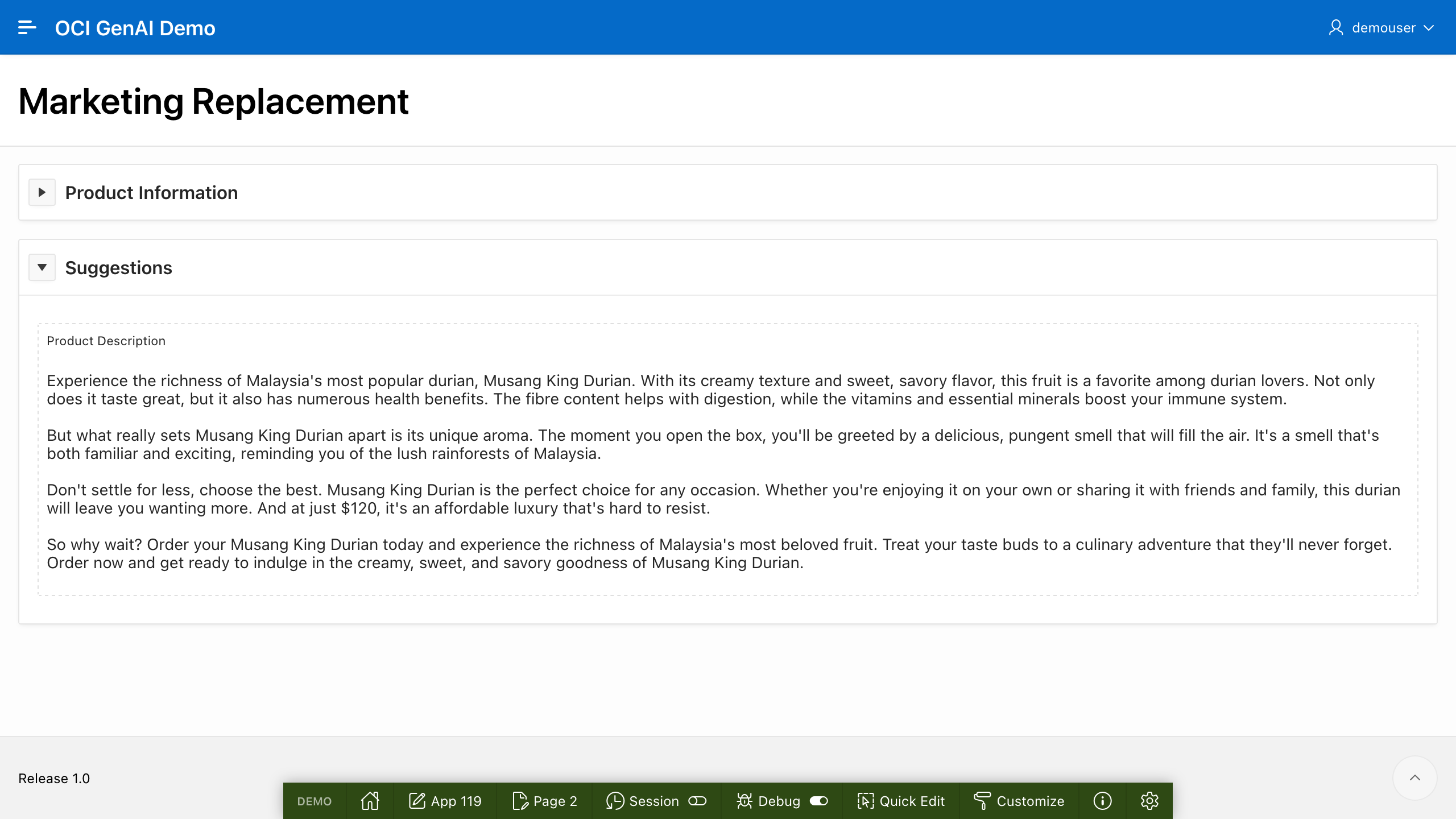

"text": "\\nExperience the richness of Malaysia's most popular durian, Musang King Durian. With its creamy texture and sweet, savory flavor, this fruit is a favorite among durian lovers. Not only does it taste great, but it also has numerous health benefits. The fibre content helps with digestion, while the vitamins and essential minerals boost your immune system. \\n\\nBut what really sets Musang King Durian apart is its unique aroma. The moment you open the box, you'll be greeted by a delicious, pungent smell that will fill the air. It's a smell that's both familiar and exciting, reminding you of the lush rainforests of Malaysia. \\n\\nDon't settle for less, choose the best. Musang King Durian is the perfect choice for any occasion. Whether you're enjoying it on your own or sharing it with friends and family, this durian will leave you wanting more. And at just $120, it's an affordable luxury that's hard to resist. \\n\\nSo why wait? Order your Musang King Durian today and experience the richness of Malaysia's most beloved fruit. Treat your taste buds to a culinary adventure that they'll never forget. Order now and get ready to indulge in the creamy, sweet, and savory goodness of Musang King Durian.",

"finishReason": "stop"

}

]

}

}
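If you would rather keep the parsing out of the page process, it can be wrapped in a small function and tried against a sample response without calling the service. This is only a sketch: the function name is hypothetical, it assumes the Llama2 response shape shown above (`inferenceResponse.choices[0].text`), and it returns null when that shape is missing.

```sql
-- Hypothetical helper: extracts the first generated text from a Llama2
-- response and converts the literal "\n" sequences into HTML line breaks.
create or replace function genai_first_choice_html(
  p_response in clob
) return varchar2
is
  l_results   json_object_t;
  l_inference json_object_t;
  l_choices   json_array_t;
  l_choice    json_object_t;
begin
  l_results := json_object_t.parse(p_response);

  l_inference := l_results.get_object('inferenceResponse');
  if l_inference is null then
    return null;
  end if;

  l_choices := l_inference.get_array('choices');
  if l_choices is null or l_choices.get_size = 0 then
    return null;
  end if;

  l_choice := treat(l_choices.get(0) as json_object_t);

  -- The pattern \\n matches a backslash followed by the letter n.
  return regexp_replace(l_choice.get_string('text'), '\\n', '<br/>');
end genai_first_choice_html;
/

-- Try it with a shortened sample response.
select genai_first_choice_html(
  '{"inferenceResponse":{"choices":[{"index":0,"text":"\\nHello\\n\\nWorld","finishReason":"stop"}]}}'
) as html
from dual;
-- returns <br/>Hello<br/><br/>World
```

In the page process, the last few lines would then reduce to `:P2_PRODUCT_DESCRIPTION := genai_first_choice_html(l_response);`.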

And the outcome? Hordes of people buying durians from Contoso Food Enterprises Inc.!

But hey, there's just NO WAY this app's going to replace any human in marketing. They are some of my favourite people to work with. 😄 Authentic creativity results from unique personalities and experiences.

Parting Words

It's 2024, and demo apps like these aren't newsworthy anymore, and definitely not as cool as Michelle Skamene's meal planning app. However, it is important to demonstrate that for an Oracle technology professional, we now have a cloud-native, easy-to-use AI-powered service to create digital transformative solutions on our favourite ecosystem, the Oracle Cloud, and of course, Oracle APEX.

What will you build tomorrow?

As always, if you are interested in discussing your unique business challenge, please reach out to me by booking a time slot.

Credits

- Banner image generated using Stability AI's Stable Diffusion model.