Train an AI Model to Recognize Oracle APEX Challenge Coins

Doug Gault had suggested a while ago that I could bring more awareness to the Oracle APEX Challenge Coin project if I had an Oracle APEX application that plots the location of each sponsored coin on a world map. I agree. However, aside from a lack of time, I had also wanted to find interesting ways for awardees to submit their claim of ownership. Over the last week, I had worked on making this dream of a "claims" map a reality with the use of the Oracle Cloud Infrastructure (OCI) Vision AI service, more specifically, its custom model training feature.

Here's an early preview of the claims process in the Claim My Oracle APEX Challenge Coin application.

Background

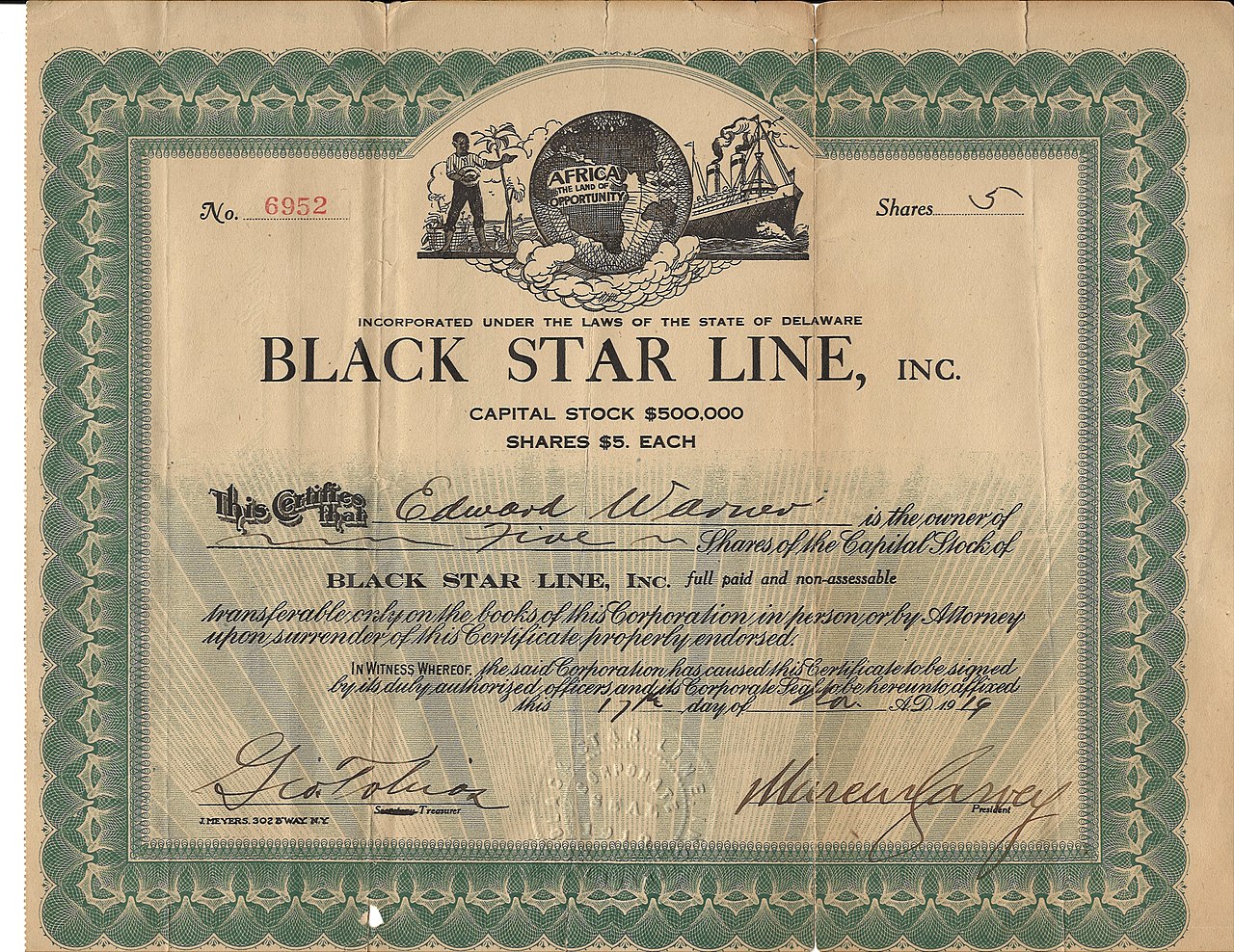

For those new to Oracle APEX, and those who have not been acquainted with the Oracle APEX Challenge Coin, it was a project I had started in early 2019 to support and acknowledge the value that developers bring to the world community. I had designed and minted a total of 200 coins over two years, and have since distributed almost all of them. As of now, there are only two more coins available for sponsorship. Read the original article for more details and how to sponsor these challenge coins.

OCI Vision

The OCI Vision is one of several AI services available on the OCI. Three pre-trained models are available for developers to create intelligent applications that need to perform computer vision tasks, such as, image and text recognition. Using the pre-trained models, developers can use the OCI REST API to perform image classifications, object detections, and text detections. It also included document processing tasks like key-value pair extraction, and document classification. However, it has since been spun off to a separate offering called Document Understanding. If you are interested to know more about using Document Understanding in Oracle APEX, please check out this Oracle LiveLabs workshop that I published in July, 2023.

OCI Vision uses deep learning (DL) approaches, and one of the key features of DL is the ability to perform transfer learning. TL;DR, transfer learning is process that uses pre-trained, generalist models, and then perform additional training over specialised datasets. This significantly reduces the amount of time, and training data, needed to train a machine learning (ML) model for specific operational needs. For example, recognising an Oracle APEX Challenge Coin! The OCI Vision's custom model feature allows developers to fine-tune and train highly specialised computer vision (CV) models to perform either image classification or object detection tasks.

In this project, I will train a custom model for detecting the front and back sides of the coin.

Prepare the Dataset

Availability of large and high quality datasets are crucial to training good CV models that have high precision and recall. Since the project was launched, recipients have spontaneously posted their cherished gifts on social media. Hence, my first challenge was to scour the Internet for these photographs. There are only 200 coins ever minted, and not every recipient has posted a photo of their coin on social media. Thankfully, I was able to harvest about slightly over one hundred of such images. They include photos of the front and back of the coin, different lighting scenes, pose, and proportional sizes.

A prerequisite for performing custom model training is to create a data set in the OCI Data Labeling service. This is an OCI managed service that lets data scientists and developers to curate and label data that will be used to perform supervised ML training. Creating an image classification and object detection models is a supervised learning task.

Before creating the dataset, assemble and upload all the images to an OCI Object Storage bucket. Once the images have been staged, the dataset created, and the records generated, you will need to work through all the images to mark and label the dataset records. Here's a quick demonstration of the data labelling process for object detection use:

Custom Model Training

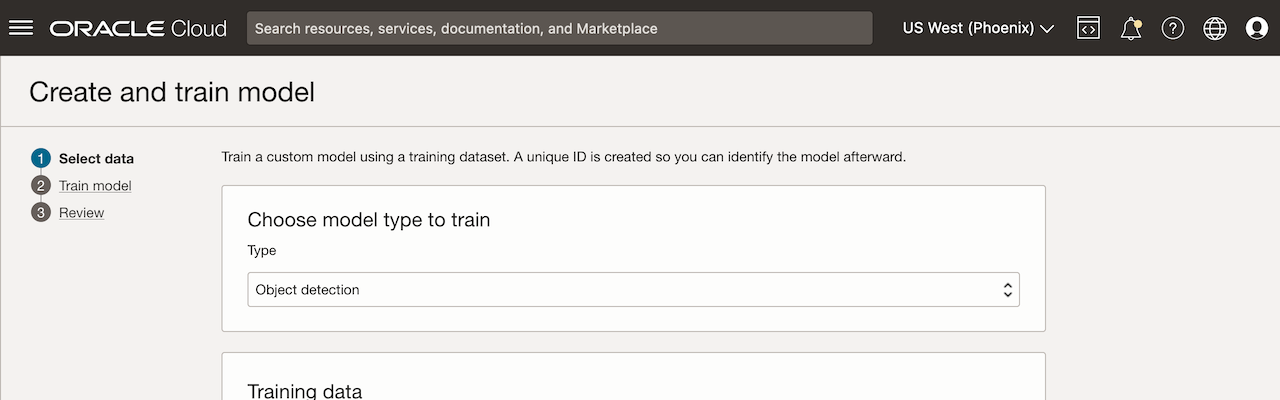

As mentioned earlier, the OCI Vision custom models feature allows data scientists and developers to easily fine-tune a inference model for specialised use cases. After creating the dataset and labelling the 100+ images, next step is to create a project and then a custom model. Choose the appropriate model type for your business use case. For this application, I chose Object detection, and then moved on to select the dataset I had created with the Data Labeling service.

There are more options to consider, including the desired training duration time. There are three options available: recommended, quick, and custom modes. In the early phases of the model training, I'd recommend using the quick mode, just to get a sense of how well the model might perform, given the quality and quantity of labelled samples in your dataset.

After the training is completed, the model detail will provide you the necessary metrics to decide if you need to repeat the process. The precision and recall are measurements typically used for judging the performance of ML models. If your model scores poorly, consider sourcing additional data for training and testing, or applying image transformations. Increasing the training duration may help, but sometimes, over-fitting a ML model may yield poorer results.

For convenience, in the lower half of the custom model details page, you will find a simple user interface for applying the custom model to source images.

Using the Custom Model in an Application

The custom model is trained to recognise both the front and back of an Oracle APEX challenge coin. However, for this application, I will only need it to detect the back side of the coin. In addition to object detection, I will also have OCI Vision to perform text detection (optical character recognition), and extract the unique serial number found on every coin.

The flow of the application is as follows:

- User begins a new claim using a wizard.

- User uploads a photo of the back of the coin, and chooses the unique serial number. A unique claims identifier is issued, and together with the submitted information, it is added to an APEX collection.

- In the next step, the user will optionally provide a display name that will be rendered in the map. The user will also use the Oracle APEX geocoded address functionality to set the estimated location of the coin.

- User confirms the details to be submitted.

- Upon confirmation, the claim is created in the database.

- The uploaded image is stored in the designated OCI Object Storage bucket.

- The claim is then evaluated using the OCI Vision using its OCI REST API. This involves an HTTP

POSTto the endpoint:https://vision.aiservice.{{REGION}}.oci.oraclecloud.com/20220125/actions/analyzeImage(substituted{{REGION}}with the desired region). - The results of the REST call is evaluated. If the desired label and serial number text is found, with the minimum confidence score, then the claim's validation status is marked

PASS. Otherwise, it is markedFAIL. - The map is refreshed, only displaying claims where the validation status value is

PASS.

This AnalyzeImage endpoint expects a JSON payload described by the AnalyzeImagesDetails. This payload is sent in the request body. Here's what it looks like:

{

"compartmentId": "ocid1.compartment.oc1..<REDACTED>",

"image": {

"source": "OBJECT_STORAGE",

"namespaceName": "<REDACTED>",

"bucketName": "claims-bucket",

"objectName": "submission_20230904011039.jpg"

},

"features": [

{

"modelId": "ocid1.aivisionmodel.oc1.phx.<REDACTED>",

"featureType": "OBJECT_DETECTION",

"maxResults": 10

},

{

"featureType": "TEXT_DETECTION",

"maxResults": 10

}

]

}

The image attribute describes the image source, including the bucket name, and unique object name. Next, the features attribute is an array of JSON objects describing the type(s) of image analysis to perform. The first feature is of type OBJECT_DETECTION. Specifying the modelId attribute containing the custom model's OCID, will instruct the endpoint to use the custom model, instead of the pre-trained object detection model. The second calls the pre-trained text detection model.

A response for a valid claim would look like this (truncated for readability):

{

"imageObjects": [

{

"name": "Back",

"confidence": 0.94813126,

...

}

],

...

"imageText": {

"words": [

...

{

"text": "A0035",

"confidence": 0.9957846,

...

}

],

...

}

The imageObjects attribute returns an array of up to 10 (set in the maxResults attribute of the request body) objects detected. Each contains the label name and corresponding confidence score. Similarly, the imageText returns an array of words (and lines). Each containing the text extracted, and its confidence score. These findings are then used to make an initial assessment if the claim is valid.

Summary

The application is still very much in its infancy. So far, working on this project has not only introduced me to the world of OCI Vision custom model training, but also some newer Oracle APEX features that I have yet to use. These includes the Map region and Geocoded Address page item. Next, I'd like to use the Approvals Component to implement a simple workflow that adds a human reviewer to make a final determination, and approve the claim.

Picture from the Wikimedia Commons.