I Am Obsolete

When I was first introduced to generative adversarial networks (GANs), I was impressed, yet not impressed. The concept, performance, and outcomes of these advanced machine learning (ML) algorithms were truly jaw-dropping, but easily abused. And so, when everyone was excitedly chatting about ChatGPT, I was cynical and tried to tune out, until I came across a comment by an old acquaintance on a news article about concerns that ChatGPT could enable plagiarism in schools.

It's like someone using a calculator in an arithmetic exam, ChatGPT is just another tool.

That statement got me rethinking my stance on the technology, and curious about its utility in my day-to-day job.

And I'm glad I did. My own exploration of this subject started with reading Sau Sheong's post to get a general idea of ChatGPT and the underlying technology that drives its ability to generate text and images. After intelligent agents and artificial neural networks, natural language processing (NLP) was the next ML topic I was passionate about. There wasn't a full module on the subject back when I did my graduate school training in knowledge engineering techniques, so my exposure was pretty much touch-and-go. During the pandemic in 2020, though, I took a Coursera/DeepLearning.AI course on TensorFlow that covered NLP in one of its modules. There I waded in deeper and learned more about recurrent neural networks (RNN), long short-term memory (LSTM), and Bidirectional Encoder Representations from Transformers (BERT). And that keyword, transformers, is what drives algorithms such as the Generative Pre-trained Transformer (GPT) used by ChatGPT.

*Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. They allow a model to effectively process input sequences of variable length and attend to certain parts of the input while generating output. In other words, they enable the model to understand the context and meaning of words and sentences. This is achieved by using self-attention mechanisms, which allow the model to weigh the importance of different parts of the input when making predictions. This has led to significant improvements in the performance of models on a wide range of NLP tasks such as machine translation, text summarization, and question answering. Overall, the transformer architecture has been a major step forward in the field of NLP, and has been widely adopted in state-of-the-art models.*

I don't know enough yet to go any deeper than throwing buzzwords around, and a lot of this is still over my head. I found this video explains these concepts clearly and quickly, so have a watch!
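To make the blurb's idea of weighing "the importance of different parts of the input" a little more concrete, here's a minimal pure-Python sketch of scaled dot-product self-attention, softmax(QK^T / sqrt(d)) V, the core operation of the original Transformer design. It's a toy under loudly stated assumptions: the queries, keys, and values are the raw input vectors (real models learn separate projection matrices for each), there's a single attention head, and the three 2-dimensional "tokens" are made up for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention over a sequence of vectors.

    Simplification (assumption): queries, keys and values are the input
    vectors themselves; a real transformer first multiplies x by learned
    projection matrices W_Q, W_K and W_V.
    """
    d = len(x[0])
    outputs, all_weights = [], []
    for q in x:
        # Similarity of this position's query with every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)  # how much attention to pay to each position
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy 2-dimensional "token" vectors (hypothetical data).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how strongly one token attends to every token in the sequence; the first token, for instance, gives equal weight to itself and to the third token, since both share its first dimension, and less to the second.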

Writing Content and Code

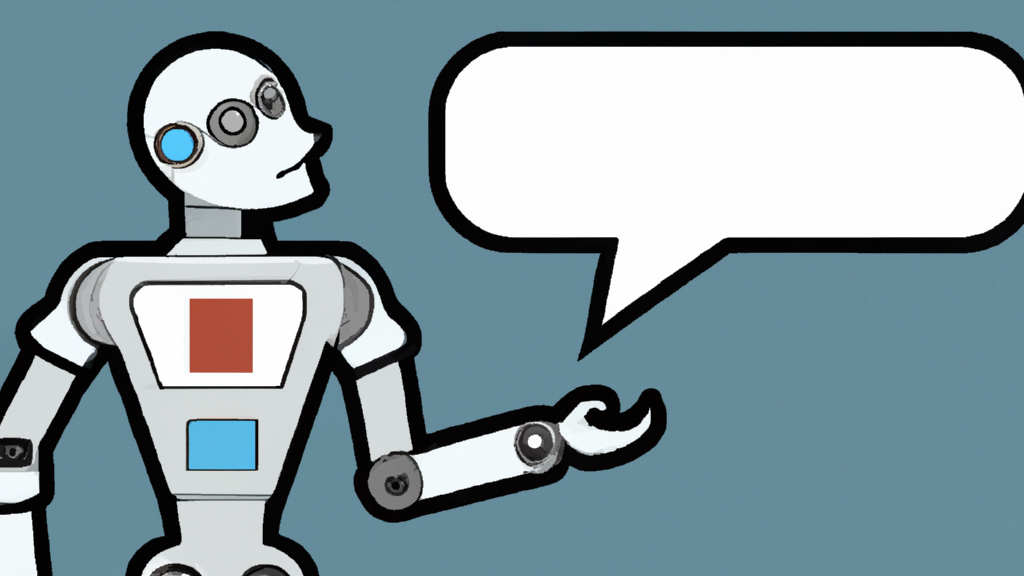

When I first started on this blog post, I thought about using OpenAI's DALL-E model to generate an image for the banner. Unlike models such as StyleGAN, DALL-E is a transformer language model that takes text and images as inputs, and generates a new image from a text description.

Here's what I did:

- Sign up for a free account to access OpenAI's API.

- Create an API key.

- Set up Conda and create a Jupyter notebook.

- In the first cell, call the Create image API, adapting the example code from the documentation:

```python
import openai

# Placeholder credentials: in practice, load these from environment
# variables rather than hardcoding them in a notebook.
openai.organization = "org-abc.....def"
openai.api_key = "averylongstringthatshouldbekeptasecret"

# Uses the pre-1.0 openai Python library's Image.create call.
response = openai.Image.create(
    prompt = "A robot with a large speech bubble."
    , n = 10
    , size = "1024x1024"
)

print("Done")
```

The parameter `prompt` is the descriptive text about the image that I'd like the model to generate. I also requested `10` images using the parameter `n`, and finally, chose the desired image size using one of the three available values: `256x256`, `512x512`, or `1024x1024`.

- It's been a while since I played with image manipulation in Python and displaying the results in a notebook, so I enlisted ChatGPT's help and asked:

create code in python to display images loaded from a URL in a grid in a jupyter notebook

And after thinking for a while, it returned this:

```python
from IPython.display import display
from PIL import Image
import requests

urls = ['https://www.example.com/image1.jpg', 'https://www.example.com/image2.jpg',
        'https://www.example.com/image3.jpg', 'https://www.example.com/image4.jpg']

images = []
for url in urls:
    response = requests.get(url)
    images.append(Image.open(response.content))

widths, heights = zip(*(i.size for i in images))
total_width = sum(widths)
max_height = max(heights)

new_im = Image.new('RGB', (total_width, max_height))
x_offset = 0
for im in images:
    new_im.paste(im, (x_offset,0))
    x_offset += im.size[0]

display(new_im)
```

Great, but that's not going to work out-of-the-box (for one, `Image.open()` expects a file path or a file-like object, not the raw bytes in `response.content`), so I adapted it to suit my needs:

```python
from IPython.display import display
from PIL import Image
import requests

images = []
# Download each generated image; "response" is the result of openai.Image.create() above.
for suggestion in response["data"]:
    _response = requests.get(suggestion["url"], stream = True)
    images.append(Image.open(_response.raw))

# Lay the images out side by side in a single row.
widths, heights = zip(*(i.size for i in images))
total_width = sum(widths)
max_height = max(heights)

new_im = Image.new('RGB', (total_width, max_height))
x_offset = 0
for im in images:
    new_im.paste(im, (x_offset,0))
    x_offset += im.size[0]

display(new_im)
```

Running this cell generated a "thumbnail" gallery of size 10240x1024.
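The adapted code pastes every image side by side in a single row, which is why the gallery is 10,240 pixels wide rather than the grid my prompt asked for. If you do want a grid, only the paste offsets need to change. Here's a minimal sketch, with a hypothetical helper `grid_layout` that assumes every image has the same size (true here, since all ten are 1024x1024):

```python
def grid_layout(n_images, columns, tile_width, tile_height):
    """Return the canvas size and the top-left paste offset for each tile.

    Tiles are placed left to right, wrapping to a new row every `columns`
    images; all tiles are assumed to share the same width and height.
    """
    rows = -(-n_images // columns)  # ceiling division
    canvas = (min(n_images, columns) * tile_width, rows * tile_height)
    offsets = [
        ((k % columns) * tile_width, (k // columns) * tile_height)
        for k in range(n_images)
    ]
    return canvas, offsets


canvas, offsets = grid_layout(10, 5, 1024, 1024)
print(canvas)      # (5120, 2048)
print(offsets[5])  # (0, 1024): the sixth image starts the second row
```

With Pillow, you would then create the canvas with `Image.new('RGB', canvas)` and call `new_im.paste(im, offset)` for each image and offset pair, just like the single-row version.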

- After settling on my choice, the first one, I ran the following code (I got lazy and asked ChatGPT for help again) to save it to my workstation:

```python
import urllib.request

# Download the first generated image to a local file.
urllib.request.urlretrieve(response["data"][0]["url"], "banner-image.jpg")
```

Here's the result after cropping it to the 16:9 ratio I typically use for banner images:

Neat, huh?
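For a square 1024x1024 image, a centred 16:9 crop keeps the full width and 1024 × 9 / 16 = 576 pixels of height, trimming 224 pixels from the top and the bottom. If you'd rather do that crop in the notebook instead of an image editor, here's a minimal sketch; `center_crop_box` is a hypothetical helper, and centring the crop is an assumption (you may prefer to frame it differently):

```python
def center_crop_box(width, height, ratio_w=16, ratio_h=9):
    """Largest centred box with the given aspect ratio, as (left, top, right, bottom).

    The tuple order matches the box argument of Pillow's Image.crop().
    """
    if width * ratio_h > height * ratio_w:
        # Image is wider than the target ratio: keep full height, trim the sides.
        new_w, new_h = height * ratio_w // ratio_h, height
    else:
        # Image is taller than (or equal to) the target ratio: trim top and bottom.
        new_w, new_h = width, width * ratio_h // ratio_w
    left = (width - new_w) // 2
    top = (height - new_h) // 2
    return (left, top, left + new_w, top + new_h)


print(center_crop_box(1024, 1024))  # (0, 224, 1024, 800)
```

Passing the result to Pillow, e.g. `Image.open("banner-image.jpg").crop(center_crop_box(1024, 1024))`, returns the cropped copy.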

I'll soon publish a full walk-through of how I set up Conda and Jupyter in my new Tech TnT section.

And what about textual content? In case you didn't notice earlier, the blurb describing what transformers are was deliberately italicized. That's because I got ChatGPT to write it. I borrowed the idea from yet another great read by Cassie Kozyrkov.

Here's the prompt I used:

Explain what are transformers in natural language processing using the style of Adrian Png.

Did it express what I wanted to say? Perhaps. Here are the key points I'd want to get across:

- Transformers are a type of neural network, and used for natural language processing tasks.

- A new concept and meaning to the word "Attention", and how it's used in NLP.

- List the types of use cases.

Whenever I have writer's block, I pen my thoughts sparsely and randomly, then reorder them and pad them with the required sentence constructs. Let's try doing that, this time including the term positional encoding. Here's the updated prompt:

Write a passage explaining what transformers are in natural language processing. Capture the following key points:

- Transformers are a type of neural network, and used for natural language processing tasks.

- Mention positional encoding.

- A new concept and meaning to the word "Attention", and how it's used in NLP.

- List the types of use cases.

Transformers are a type of neural network architecture that have revolutionized the field of natural language processing. These models are designed to effectively process input sequences of variable length and generate output by using self-attention mechanisms. This allows the model to weigh the importance of different parts of the input when making predictions, which is crucial for understanding the context and meaning of words and sentences. A key component of transformers is the use of positional encoding, which allows the model to understand the order of the words in a sentence, even when the input is processed in parallel. This was a new concept and meaning to the word "Attention" when it was first introduced in NLP.

There are many use cases for transformers in NLP, including but not limited to machine translation, text summarization, question answering, and text-to-speech synthesis. The transformer architecture has been widely adopted in state-of-the-art models and has led to significant improvements in performance on a wide range of NLP tasks.
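The passage above name-checks positional encoding without showing it. Since a transformer looks at all tokens in parallel, something has to tell it where each token sits. The original Transformer paper used fixed sine and cosine waves of different frequencies: PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)). Here's a minimal pure-Python sketch of that sinusoidal scheme; GPT-2-style models, for example, learn their position embeddings instead, so treat this as one illustrative variant rather than what ChatGPT uses.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encodings, one row per position.

    Even dimensions use sine and odd dimensions use cosine; each
    sine/cosine pair shares the frequency 1 / 10000^(2i / d_model).
    """
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2  # index of the sine/cosine pair
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


# These vectors are added element-wise to the token embeddings, so the
# same word at two different positions gets a different input vector.
pe = positional_encoding(seq_len=4, d_model=6)
for pos, row in enumerate(pe):
    print(pos, [round(v, 3) for v in row])
```

Position 0 comes out as alternating zeros and ones (sin 0 and cos 0), and every later position gets its own distinct pattern.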

I don't know if I could express it as well as it did, but hey, I like it. But do I like using it? Not really. I'm still not fully comfortable with using the text as-is. I like my content to be authentically "me" with my language flaws included. However, how is this different from engaging a professional copywriter to write an article based on the ideas you provide?

Who Owns the Content

I think this is a question that is often asked and debated: who owns the generated code, images, or text? For DALL-E, OpenAI's content policy and FAQ seem to say that you are free to use the generated images. However, my immediate concern was about the works they were derived from, which appears to be explained here. I'm not good with legalese, so my level of comfort using the generated images is 4 on a scale of 1 to 10. And I'd presume the concerns about generated code and text are very similar.

The "CANIUSE" in Oracle APEX

Yes! For those new to Oracle APEX, this is a rapid application development platform that lets you create usable web applications very quickly. It won't take you long to create an intelligent web application that takes advantage of the OpenAI models. The models are exposed as REST endpoints, and Oracle APEX has the all-powerful APEX_WEB_SERVICE API that lets developers consume REST services.

Here's a quick example of how I'd send source code submitted through an Oracle APEX page to OpenAI and get back an explanation of what the code is attempting to do:

```sql
declare
  -- Prompt template: the submitted code goes between the "---" markers.
  l_prompt varchar2(32767) := q'[---
{{CODE}}
---
Here's what the code does:]';
  l_request_body json_object_t;
  l_response clob;
begin
  l_request_body := json_object_t();
  l_request_body.put('model', 'code-davinci-002');
  l_request_body.put('temperature', 0.40);
  -- json_object_t escapes the value when serialized, so pass the raw prompt;
  -- escaping it first with apex_escape.json would double-escape it.
  l_request_body.put('prompt', replace(l_prompt, '{{CODE}}', :P2_SOURCE_CODE));
  -- Stop generating when the model emits another "---" marker.
  l_request_body.put('stop', '---');
  l_request_body.put('max_tokens', 250);

  apex_debug.info(replace(l_prompt, '{{CODE}}', :P2_SOURCE_CODE));

  -- Placeholder credentials: don't hardcode these; an APEX Web Credential
  -- (passed via p_credential_static_id) is a safer option.
  apex_web_service.set_request_headers(
    p_name_01 => 'Authorization'
    , p_value_01 => 'Bearer averylongstringthatshouldnotbehardcoded'
    , p_name_02 => 'OpenAI-Organization'
    , p_value_02 => 'org-donothardcodethisaswell'
    , p_name_03 => 'Content-Type'
    , p_value_03 => 'application/json'
  );

  l_response := apex_web_service.make_rest_request(
    p_url => 'https://api.openai.com/v1/completions'
    , p_http_method => 'POST'
    , p_body => l_request_body.to_string()
  );

  -- Code to parse the JSON response.
end;
```
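The PL/SQL block above leaves out the response parsing. To illustrate what that step has to do, here's a minimal Python sketch that assumes the documented Completions response shape, where the generated text lives in `choices[0].text` and errors come back as an `error` object; `extract_completion_text` is a hypothetical helper name.

```python
import json


def extract_completion_text(response_body):
    """Pull the generated text out of a (legacy) Completions API response.

    Assumes the response shape {"choices": [{"text": "...", ...}, ...], ...}.
    """
    payload = json.loads(response_body)
    choices = payload.get("choices") or []
    if not choices:
        # Error responses carry an "error" object instead of "choices".
        raise ValueError(payload.get("error", {}).get("message", "no choices returned"))
    # With n=1 (the default) there is a single choice; take its text.
    return choices[0]["text"].strip()
```

In the APEX page process itself, the same path (`choices`, then the first element's `text`) is what you'd read out of `l_response`, for example after parsing it with `JSON_OBJECT_T.parse`.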

There's a lot of cool functionality we could build this way. These OpenAI models are also capable of providing a quick review of the code, though the Codex model seems fairly limited when it comes to PL/SQL. I had better results running this pipelined table function through GPT-3 for a security and performance review:

```sql
create or replace type typ_animal_names is table of varchar2(50)
/

create or replace function find_animals_by_species(p_species_name varchar2)
return typ_animal_names
pipelined
is
begin
  for animal in (
    select animal_name from animal where species = p_species_name
  ) loop
    pipe row (animal.animal_name);
  end loop;
end;
/
```

And at the end, I provided this prompt:

```
-- Suggest security and performance improvements to this code.
---
```

What do you think it would say? Perhaps I should have masked the outputs and asked Steven Feuerstein for his opinion. He did write about this topic quite some time ago. Anyways, here's what I got from GPT-3 after a few tries.

```
/**
Security:
1. Add a check to ensure that the input parameter is valid and does not contain any malicious code.
2. Add a check to ensure that the user has the necessary permissions to execute the function.

Performance:
1. Create an index on the species column of the animal table.
2. Use bind variables for the input parameter to ensure that the query is not re-parsed each time it is executed.
3. Use a cursor to fetch the results from the query instead of looping through the results.
4. Use bulk collect to fetch the results from the query instead of looping through the results.
5. Use a PL/SQL table instead of a pipelined function*/
```

If you're interested, here are the parameters I had set:

- Model: text-davinci-003

- Temperature: 0.3

- Maximum Length: 150

It would be nice to automate this as ChatGPT suggested, but I found the responses inconsistent and, at times, a little odd. That said, it's still a good first pass at scrutinizing the code. Consider it a helper, paired with a more experienced developer.
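If you did want to automate the review, the Playground settings listed above map onto the Completions API's `model`, `temperature` and `max_tokens` fields (the Playground's "Maximum Length" is `max_tokens`). Here's a minimal Python sketch of assembling that request body; the helper name `build_review_request` and the blank line between the code and the review instruction are assumptions, not necessarily how my prompt was laid out.

```python
import json

# Instruction appended after the code, as in the review prompt shown earlier.
REVIEW_INSTRUCTION = "-- Suggest security and performance improvements to this code.\n---"


def build_review_request(source_code, model="text-davinci-003",
                         temperature=0.3, max_tokens=150):
    """Assemble a Completions API request body for a code review.

    Defaults mirror the Playground settings above; this is an
    illustrative helper, not an official client.
    """
    prompt = source_code.rstrip() + "\n\n" + REVIEW_INSTRUCTION
    return json.dumps({
        "model": model,
        "prompt": prompt,
        "temperature": temperature,
        "max_tokens": max_tokens,
    })


# Placeholder source code; in practice, read the PL/SQL you want reviewed.
body = build_review_request("create or replace function f return number is begin return 1; end;\n/")
print(body)
```

The resulting body could then be POSTed to the same `/v1/completions` endpoint used in the APEX example.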

Pitting Against OCI AI Services

I asked ChatGPT to:

List all possible uses of OpenAI transformers.

And here's the response I received:

- Natural Language Processing (NLP) tasks such as language translation, text summarization, and sentiment analysis.

- Chatbots and virtual assistants for customer service and support.

- Text-to-speech and speech-to-text applications.

- Image and video captioning.

- Language generation for creative writing and content creation.

- Automated question answering systems.

- Automatic text classification and tagging.

- Text completion and prediction.

- Named Entity Recognition (NER) and part-of-speech tagging.

- Text-based search and retrieval systems.

- Semantic similarity and relatedness measurements.

- Personalization and recommendation systems.

- Language understanding for intelligent agents and robots.

- Text-based summarization of news articles and other long-form content.

- Text-based sentiment analysis for social media monitoring and marketing.

The final topic I'd like to explore is how OpenAI's product offerings compare with the services provided by Oracle AI. To do that, let's first consolidate the list of 15 use cases into these buckets:

- Question and answer

- Content (text, images, and code) generation

- Text-to-speech

- Speech-to-text

- Named entity recognition

- Sentiment analysis

- Image and video captioning

- Language translation

- Text/document classification

- Keyword extraction and tagging

- Text summarization

| Functionality | Language | Speech | Vision | Document Understanding |
|---|---|---|---|---|
| Question and answer | | | | |
| Content generation | | | | |
| Text-to-speech | | | | |
| Speech-to-text | | | | |
| Named entity recognition | | | | |
| Sentiment analysis | | | | |
| Image and video captioning | | | | |
| Language translation | | | | |
| Text/document classification | | | | |
| Keyword extraction and tagging | | | | |
| Text summarization | | | | |

Oracle AI is clearly lacking in content generation and text summarization. As for "text-to-speech", it does not appear in OpenAI's list of APIs, so I wonder where that suggestion came from. Perhaps ChatGPT is pulling a fast one?

So, I thought I'd ask ChatGPT the same question that I'm trying to answer:

How does Oracle AI compare with ChatGPT?

And this is what it said:

Oracle AI and ChatGPT are both natural language processing (NLP) technologies, but they have different capabilities and are used for different purposes.

ChatGPT is a transformer-based language model developed by OpenAI. It is primarily used for generating human-like text, such as in chatbots, virtual assistants, and language generation tasks. ChatGPT is trained on a large dataset of text, allowing it to generate text that is often indistinguishable from human-written text.

Oracle AI, on the other hand, is a collection of AI-based products and services offered by Oracle Corporation. It includes several NLP-based technologies, such as natural language understanding, text analytics, and chatbot building. Oracle AI is designed to be used in enterprise environments and can be integrated with other Oracle products and services.

In summary, ChatGPT is more focused on language generation, while Oracle AI is a broader set of AI-based products and services that can include NLP capabilities. Oracle AI is more focused on enterprise and business use cases, while ChatGPT is focused more on generating human-like text.

Apart from the first paragraph, I'd say that's mostly factual and accurate, which only confirms that this is a rather difficult comparison to make. Duh! My main goal here was really to highlight how we could also use Oracle AI to provide solutions for some of these use cases.

Summary

Cool as this technology might be, there's a nagging fear that pretty soon, IT practitioners like myself will be replaced by AI. I think that fear is valid. However, as Sau Sheong aptly describes ChatGPT, these are tools, and we should embrace them to empower ourselves to do our jobs faster and better. It was only a few years ago that Oracle launched the Autonomous Database product. Back then, it was touted as intelligent and sent shivers down DBAs' spines. Almost four years on, DBAs are still needed, even when a company runs only ADBs in its environment. What it has done, as advertised, is take away some of the mundane tasks like database patching and upgrading (and introduce new ones), freeing us up to perform other functions, be innovative, and discover other efficiencies and approaches to improve business operations.

Over the past few days, I have used ChatGPT to help me with tasks such as writing a French version of an email and constructing some parts of this blog post. I still had to validate and adapt the outputs, but more importantly, as in the case of this blog post, compose the flow and piece it all together into the final content. I don't think ChatGPT is quite ready to replicate my thought processes and feelings/opinions, or to validate the story I wished to tell.

And my last words are to thank the brilliant brains at OpenAI, Google, and all over the world for their contributions to computer science so that I can write this blog post with a bit more flavour. But please try not to make Judgement Day a reality. Thank you!

Photo by Volodymyr Hryshchenko on Unsplash