Watch Your Weight

A reader left an interesting comment/question in my previous post on Making the Move to the Autonomous Database:

"Would You explain how can i check my on-premise DB to satisfy ADB size limitation 20G? What is the size 20G of?"

This is an excellent question to ask before embarking on any Autonomous Database (ADB) migration plans and so I dug a bit deeper.

Folks moving applications and data from an Oracle Database Express Edition (XE) instance, version 11gR2 or 18c, will probably not have problems fitting into a Free Tier Autonomous Database (ADB) since database sizes are capped at 11 and 12 GB respectively. You are allowed a maximum of 20 GB user for each Free Tier ADB provisioned.

To estimate the data size to be migrated, execute the following query as SYS on the source database:

select

case grouping(owner) when 1 then 'Total' else owner end as owner

, round(sum(bytes) / power(2,10), 2) as space_usage_kb

, round(sum(bytes) / power(2,20), 2) as space_usage_mb

, round(sum(bytes) / power(2,30), 2) as space_usage_gb

from dba_segments

where owner in ('CONTOSO_APP', 'CONTOSO_WEB', 'LOGGER')

group by rollup(owner);

Output:

OWNER SPACE_USAGE_KB SPACE_USAGE_MB SPACE_USAGE_GB

-------------------- -------------- -------------- --------------

CONTOSO_APP 32,896.00 32.13 0.03

CONTOSO_WEB 6,720.00 6.56 0.01

LOGGER 1,280.00 1.25 0.00

Total 40,896.00 39.94 0.04

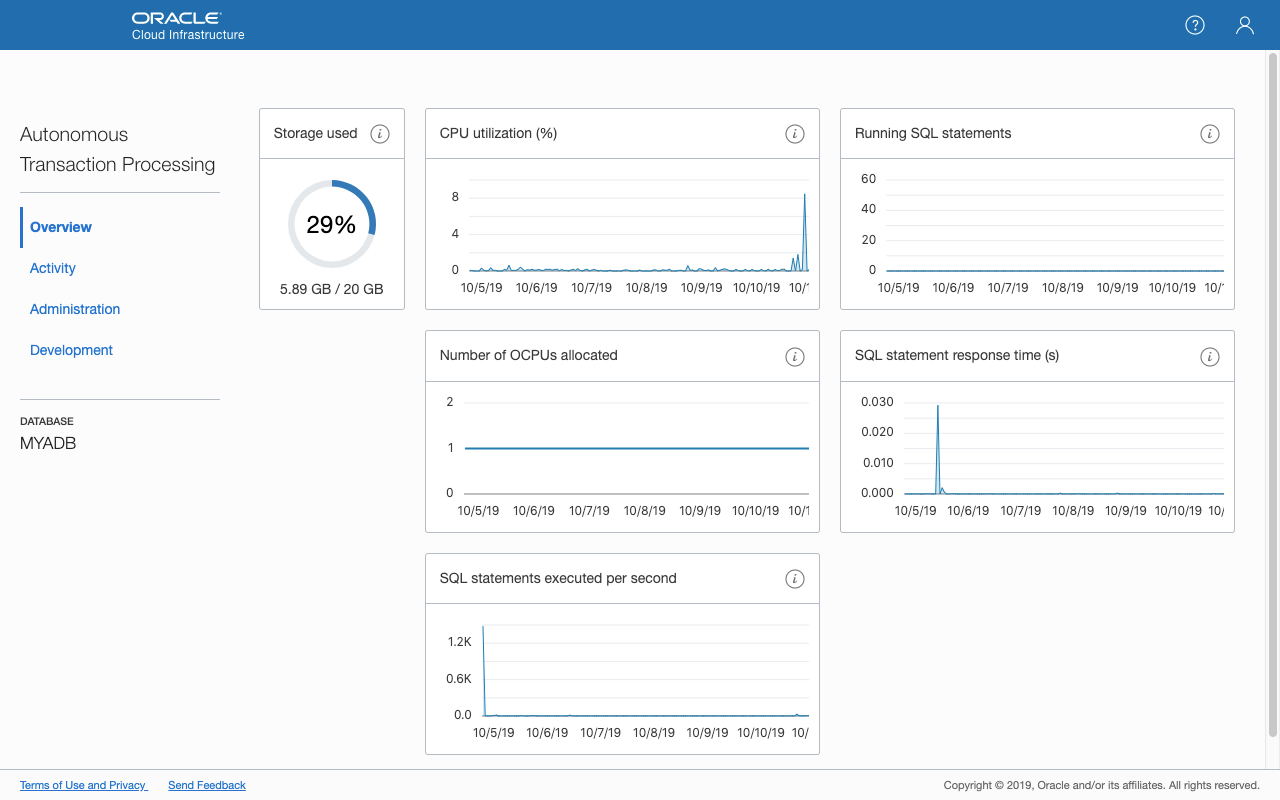

That's great! My toy application only requires about 40 MB of storage, but after importing the data into the ADB, I get this on the Service Console?

As it turns out, the total amount of storage used includes quite a few more components than anticipated. The following query should yield values that tally with the amount displayed on the Service Console.

select

case grouping(tablespace_name) when 1 then 'Total' else tablespace_name end as tablespace_name

, round(sum(bytes) / power(2, 30), 2) as total_size_gb

, round(sum(maxbytes) / power(2, 30), 2) as max_size_gb

from (

select tablespace_name, bytes, maxbytes

from dba_data_files

where tablespace_name != 'SAMPLESCHEMA'

union all

select 'TEMP' as tablespace_name, bytes, maxbytes

from dba_temp_files

)

group by rollup(tablespace_name);

Output:

TABLESPACE_NAME TOTAL_SIZE_GB MAX_SIZE_GB

------------------------------ ------------- -------------

DATA 0.10 32,768.00

DBFS_DATA 0.10 32,768.00

SYSAUX 2.07 32,768.00

SYSTEM 0.74 32,768.00

TEMP 2.03 6.00

UNDOTBS1 0.42 1.00

UNDO_2 0.42 1.00

Total 5.89 131,080.00

8 rows selected.

Based on the above output, it is clear that a more accurate measure of the ADB's storage footprint requires us to consider other "non-application" tablespaces, e.g. SYSTEM, SYSAUX, TEMP, UNDO and more!

The tablespace DATA is the default tablespace for schema users (think USERS tablespace) and is where your application data really lives. Given that TEMP, UNDOTBS1 and perhaps UNDO_2 have a max of 8 GB, it looks like application data really needs to remain within the confines of 12 GB. And even so, more needs to be understood about how the other tablespaces might grow as the database is used, before we can fully appreciate how much room we have to maneuver.

Oracle Advanced Compression

If you have used Oracle Advanced Compression, available in 18c XE, then your tables should remain compressed. While an Automonous Transaction Processing (ATP) database does not compress tables by default, it will do so if specified. For Autonomous Data Warehouse (ADW), compression is enabled by default.

NOTE:

If you wanted to get some data to try out the following steps, use the

CUSTOMERStable in schemaSHthat should be available to all ADBs:

create table customers_sample as select * from SH.customers where rownum < 10000;

First, check if the table already has compression enabled. Execute this query as the schema user:

```sql

select table_name, compression, compress_for

from user_tables

where table_name = 'CUSTOMERS_SAMPLE';

Output:

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------------------------

CUSTOMERS_SAMPLE DISABLED

Next, estimate the amount of storage used by this table:

select

segment_name

, bytes / power(2, 10) as size_kb

, bytes / power(2, 20) as size_mb

, bytes / power(2, 30) as size_gb

from user_segments

where 1 = 1

and segment_name = 'CUSTOMERS_SAMPLE'

and segment_type = 'TABLE'

;

Output:

SEGMENT_NAME SIZE_KB SIZE_MB SIZE_GB

------------------------------ ------------ ------------ ------------

CUSTOMERS_SAMPLE 3072.00 3.00 0.00

The DBMS_COMPRESS.GET_COMPRESSION_RATIO is a built-in proceedure that could be used to estimate the amount of space savings. The code below checks the compression ratio for table CUSTOMERS_SAMPLE with Advanced Row Compression.

declare

c_schema_name constant varchar2(20) := 'CONTOSO_WEB';

c_object_name constant varchar2(20) := 'CUSTOMERS_SAMPLE';

l_blkcnt_cmp pls_integer;

l_blkcnt_uncmp pls_integer;

l_row_cmp pls_integer;

l_row_uncmp pls_integer;

l_cmp_ratio number;

l_comptype_str varchar2(32767);

begin

dbms_compression.get_compression_ratio(

scratchtbsname => 'DATA'

, ownname => c_schema_name

, objname => c_object_name

, subobjname => null

, comptype => dbms_compression.comp_advanced

, blkcnt_cmp => l_blkcnt_cmp

, blkcnt_uncmp => l_blkcnt_uncmp

, row_cmp => l_row_cmp

, row_uncmp => l_row_uncmp

, cmp_ratio => l_cmp_ratio

, comptype_str => l_comptype_str

);

dbms_output.put_line('Compression ratio = ' || l_cmp_ratio);

end;

/

Now add compression to the CUSTOMERS_SAMPLE table:

alter table customers_sample

move compress for all operations;

The MOVE clause allows you to encrypt existing data in the table, but bear in mind that there is sufficient amount of space for the transition. Don't perform this operation if you are already running out of space!

Check the table properties and storage sizes again:

SQL> select table_name, compression, compress_for

2 from user_tables

3 where table_name = 'CUSTOMERS_SAMPLE';

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------------------------

CUSTOMERS_SAMPLE ENABLED ADVANCED

SQL> select

2 segment_name

3 , bytes / power(2, 10) as size_kb

4 , bytes / power(2, 20) as size_mb

5 , bytes / power(2, 30) as size_gb

6 from user_segments

7 where 1 = 1

8 and segment_name = 'CUSTOMERS_SAMPLE'

9* and segment_type = 'TABLE';

SEGMENT_NAME SIZE_KB SIZE_MB SIZE_GB

------------------------------ ------------ ------------ ------------

CUSTOMERS_SAMPLE 2048.00 2.00 0.00

Looks like I didn't achieve the amount of compression that was anticipated, but something is definitely better than nothing. There are other compression options I could use to achieve higher amounts of space savings, but deciding on the right approach is obviously more involved. The examples here are meant to demonstrate what is possible with ADBs.