A Yearful of AI But What's Next?

In the year since OpenAI popularised generative AI, much of the excitement and intrigue has centred on the generative power of large language models (LLMs), or foundational models. Understandably so. However, the generative power of these models covers only a segment of business needs. The underlying promise of these frontier models lies in their ability to "understand" natural language, and that, to me, is where we will find more utility.

For the last few years at Insum, we've had an almost biannual tradition of reviewing what's been going on in the AI/ML space, in particular, around what Oracle has been doing to provide innovative solutions and tools. If you missed those, I have provided the links below. In the absence of an updated review, I am hoping that this final 2023 blog post would serve somewhat as a stopgap.

In late 2020, we did an interview with world-renowned ML expert Heli Helskyaho, where we discussed AI and its impact on society.

Monty Latiolais prophetically asked whether AI could be used to improve developer productivity. At Insum, we had looked at GitHub Copilot in its beta days, thanks to Federico Gaete. However, at that time, we found that it provided limited help to us, as it was trained on public repositories where PL/SQL is overwhelmingly underrepresented. We haven't given up.

It is exciting that Oracle is working towards a "no code" platform 😉. In this blog post, the authors outline the various facets where AI will help Oracle APEX and citizen developers create applications and write SQL code. And not to forget, on the Oracle Autonomous Database, there already is Select AI. I have written previously about my experience, and am still anxiously waiting for the feature that allows you to use a self-hosted LLM.

In early 2022, I did a one-to-one interview with Marc Ruel, and we discussed how we can quite easily build "AI APEX applications" by calling the REST APIs of the various Oracle Cloud Infrastructure (OCI) AI services, and/or by using AutoML to train and deploy in-database ML models.

Have you tried them out?

OCI Generative AI

IMPORTANT DISCLAIMER

While I, the author, am an active participant in Oracle's Generative AI service beta programme, it is important to clarify that the following content does not contain any exclusive or proprietary information that is not already publicly documented in Oracle Cloud Infrastructure (OCI) documentation. Oracle makes extensive resources available to its users, and I encourage everyone to review these materials for accurate and up-to-date information regarding the capabilities and usage of the Generative AI service. The intention of this text is to provide an example or context rather than to disclose any confidential or undisclosed features.

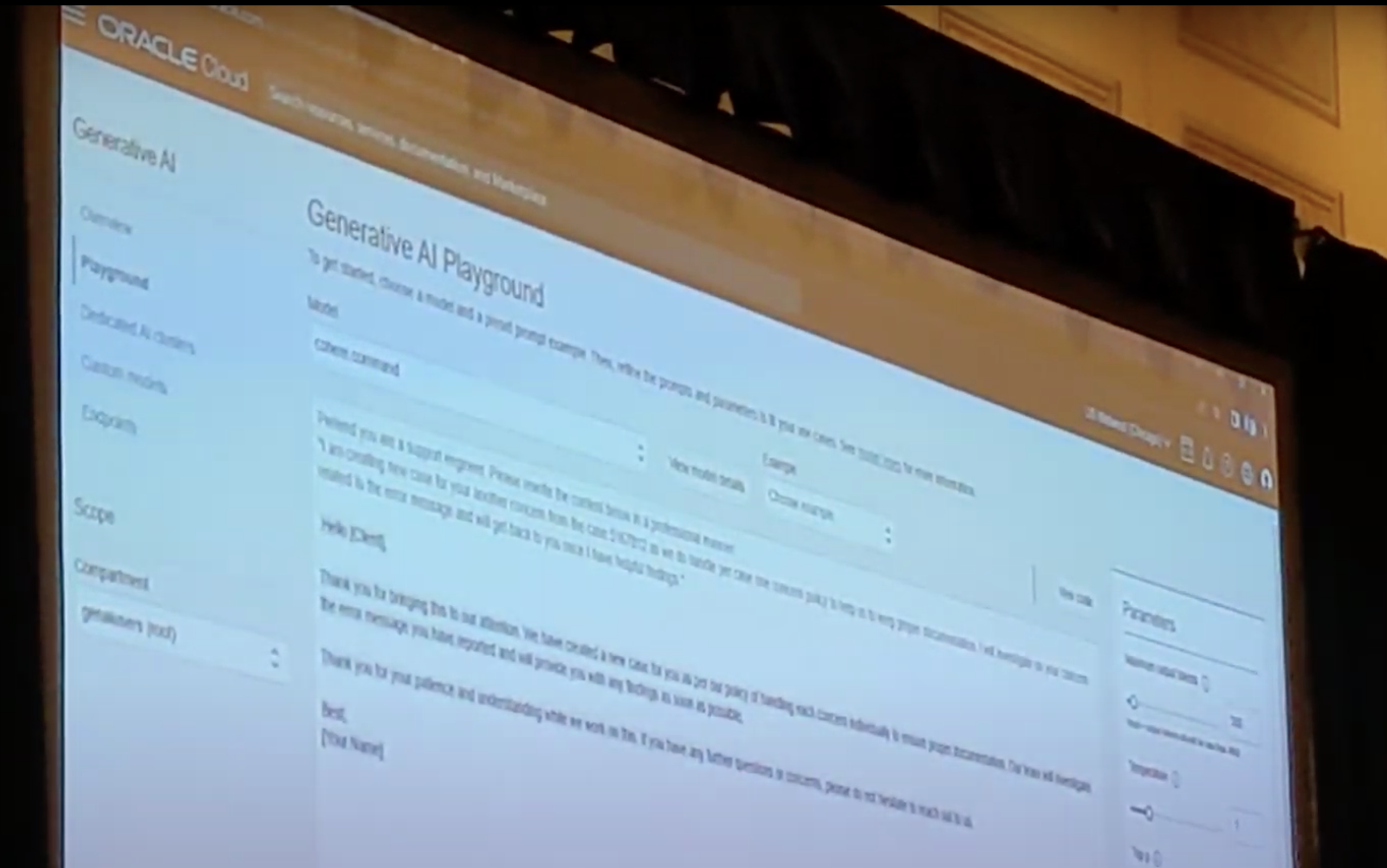

2024 will be a year that we should see Oracle releasing new services and features that target the generative AI space. One of them is the Oracle Generative AI (GenAI) service, a cloud native service that was first revealed at Oracle CloudWorld 2023. I was privileged to have attended an introductory session by Luis Cabrera-Cordon, where he demonstrated some of its features using the OCI Console.

If you've used any other similar "LLM-as-an-API" service, then the user interface would be familiar to you. It allows you to enter a prompt and adjust parameters like temperature and maximum tokens.

The playground on the OCI Console looks like a great tool to give the feature a quick spin, try things out, and to see how it works. However, the real proof of the pudding is using it inside an application, for example, in Oracle APEX. And like the other Oracle AI services, you can call these models using the OCI REST APIs.

From the API documentation, the key endpoints that we developers would look at using are:

- GenerateTextResult

- SummarizeTextResult

- EmbedTextResult

Like other OCI REST APIs, the required and optional parameters are passed to the endpoints as a JSON payload in the request body, and the results will be returned as a JSON response.

    {
      "compartmentId": "ocid...",
      "input": "The text to be summarised.",
      "servingMode": {
        "servingType": "ON_DEMAND",
        "modelId": "???"
      },
      "additionalCommand": "Additional instructions for the model.",
      "extractiveness": "AUTO",
      "format": "PARAGRAPH",
      "isEcho": true,
      "length": "MEDIUM",
      "temperature": 1
    }

The attributes are pretty well documented. I am particularly interested in the servingMode attribute. Its value is of type either OnDemandServingMode or DedicatedServingMode. Both share the attribute servingType, a string that is either ON_DEMAND or DEDICATED. The on-demand serving mode additionally requires the attribute modelId, which you can obtain by querying the endpoint ListModels (under ModelCollection in the documentation). This returns a list of elements of type ModelSummary. I would assume that the required model identifier can be found in the id attribute.

The dedicated serving mode has endpointId that points to a dedicated AI cluster that you can provision to perform fine-tuning. These are (likely) GPU compute clusters that allow us to fine-tune a base LLM over proprietary datasets in order to achieve more relevant and precise results.
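To make the two serving modes concrete, here is a minimal sketch of how the servingMode object could be assembled for either case. It is written in Python purely for illustration, rather than the PL/SQL an APEX application would use. The helper name `serving_mode` is hypothetical, the attribute names are the ones discussed above, and the identifiers are placeholders rather than real OCIDs.

```python
import json


def serving_mode(serving_type: str, identifier: str) -> dict:
    """Build the servingMode object for a request payload.

    Hypothetical helper: ON_DEMAND carries a modelId and DEDICATED
    carries an endpointId, as described in the text above.
    """
    if serving_type == "ON_DEMAND":
        return {"servingType": "ON_DEMAND", "modelId": identifier}
    if serving_type == "DEDICATED":
        return {"servingType": "DEDICATED", "endpointId": identifier}
    raise ValueError(f"Unsupported servingType: {serving_type}")


# Placeholder identifiers, not real OCIDs.
payload = {
    "compartmentId": "ocid...",
    "input": "The text to be summarised.",
    "servingMode": serving_mode("ON_DEMAND", "placeholder-model-id"),
}
print(json.dumps(payload, indent=2))
```

Switching to a fine-tuned model on a dedicated AI cluster would then only mean swapping in `serving_mode("DEDICATED", "placeholder-endpoint-id")`, assuming the rest of the payload stays the same.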

Calling the GenAI endpoints and processing their responses should be easy to accomplish with the Oracle APEX PL/SQL APIs. This will probably work:

    declare
      l_response clob;
    begin
      -- Tell the service that the request body is JSON.
      apex_web_service.g_request_headers(1).name := 'Content-Type';
      apex_web_service.g_request_headers(1).value := 'application/json';
      -- Call the summarisation endpoint, signing the request with a web credential.
      l_response := apex_web_service.make_rest_request(
        p_url => 'https://generativeai.aiservice.us-chicago-1.oci.oraclecloud.com/20231130/actions/summarizeText'
        , p_http_method => 'POST'
        , p_body => json_object(
            key 'compartmentId' value 'ocid...'
            , key 'input' value 'The text to be summarised.'
            , key 'servingMode' value json_object(
                key 'servingType' value 'ON_DEMAND'
                , key 'modelId' value '???'
              )
            , key 'additionalCommand' value 'Additional instructions for the model.'
            , key 'extractiveness' value 'AUTO'
            , key 'format' value 'PARAGRAPH'
            -- "format json" emits a JSON boolean rather than the string "true".
            , key 'isEcho' value 'true' format json
            , key 'length' value 'MEDIUM'
            , key 'temperature' value 1
          )
        , p_credential_static_id => 'MY_OCI_CREDENTIALS'
      );
    end;
    /

And after that, all we need to do is parse the results, and there you have it, an AI app! 😉

    {
      "id": "ocid...",
      "input": "The text to be summarised.",
      "modelId": "???",
      "modelVersion": 1.0,
      "summary": "The summarised text that we wanted."
    }
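As a rough illustration of the "parse the results" step, the sketch below pulls the summary attribute out of the sample response shown above. It uses Python only to keep the example self-contained; in an APEX application the same step would be done with PL/SQL JSON parsing. The function name `extract_summary` is hypothetical, and a real response may carry more attributes or an error document instead.

```python
import json

# Sample response copied from the example above.
response_body = """
{
  "id": "ocid...",
  "input": "The text to be summarised.",
  "modelId": "???",
  "modelVersion": 1.0,
  "summary": "The summarised text that we wanted."
}
"""


def extract_summary(body: str) -> str:
    """Return the summary attribute, or raise if it is missing."""
    document = json.loads(body)
    summary = document.get("summary")
    if not isinstance(summary, str):
        raise ValueError("Response has no 'summary' attribute")
    return summary


print(extract_summary(response_body))
```

Checking for the attribute explicitly, instead of indexing blindly, keeps a failed or malformed call from surfacing as an obscure error further down in the application.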

Finally, since these models are from Cohere, I am hoping that, besides prompt engineering and fine-tuning, we can also perform some in-context learning. For example:

Review:

It's a great camera system!

We are able to save video clips, and it's easy to set up.

We have our motion alerts on high sensitivity, and we receive any motion alerts, which is amazing. The camera quality seems to be clear on our end, including any sounds. We can see everything even at night.

We purchased 2 cameras on sale on Cyber Monday. Amazing deal! Highly recommend

Rating:

Five

Review:

Not happy with these cameras. You start off by having to sign up for a free 30 day subscription trial. If you stay on the trial you don’t actually know how the USB storage option works for recorded videos (if you don’t want to continue with a monthly subscription for a monthly fee). So, I cancelled my free subscription so I could try the usb storage option rather than pay for subscription. This also allows me the flexibility to return it within 30 days. Interesting that you cannot “pause” free trial and reinstate later. Once cancelled you lose your free trial which seems like a gimmick.

The USB (not included) does work but, retrieving the videos is very slow and trying to access the cameras in “live” view sometimes does not work.

The daytime quality of cameras is ok but the night time quality is poor. Also, they are not weather resistant. I have mine protected under roofline but most mornings the cameras are blurry because of condensation despite having them in a somewhat protected area. We did also put one on a fence as they shown on the website but quickly had to remove it because of condensation build up (when it wasn’t even raining).

Will be returning. Not happy at all.

Rating:

One

Review:

It's a decent camera system without a live view option for multiple cameras. Important: this kit includes the Sync Module already, I didn't see that listed anywhere so I bought separately the sync module and ended up with 2 of them.

Pros:

- Able to communicate with people with 2-way communication.

- Able to save video clips.

- Easy to set up

Cons:

- No constant live view. It's not like a traditional camera security system where you look at a monitor and see multiple cameras with a live view. You need to click on each camera and it will (slowly) load the view at that camera.

- I haven't received any motion alerts. I have the sensitivity up high, I selected zones, I did everything they said to do and still no alerts for motion.

- The camera quality is not like it is shown on the photos. The photos on Amazon are taken with a high end camera and made to look like they're the view of these cameras. It is nothing like the photos shown. Picture a digital camera from 2010. That is the image you'll get.

Rating returned as a json object with one attribute named rating:

That then returns a result like this:

    {
      "rating": 3
    }
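The in-context learning pattern above can be sketched as two small steps: assemble the labelled examples and the new review into one prompt, then parse the JSON that comes back. The Python below is an illustrative sketch only. `build_prompt` and `parse_rating` are hypothetical names, the reviews are shortened stand-ins for the full text, and the 1 to 5 integer scale is an assumption based on the sample result.

```python
import json

# Instruction line taken from the prompt above.
INSTRUCTION = "Rating returned as a json object with one attribute named rating:"


def build_prompt(examples, new_review):
    """Assemble a few-shot prompt from (review, rating) pairs plus a new review."""
    parts = [f"Review:\n{review}\nRating:\n{rating}\n" for review, rating in examples]
    parts.append(f"Review:\n{new_review}\n{INSTRUCTION}")
    return "\n".join(parts)


def parse_rating(model_output):
    """Extract the rating, assuming an integer on a 1 to 5 scale."""
    rating = json.loads(model_output)["rating"]
    if isinstance(rating, bool) or not isinstance(rating, int) or not 1 <= rating <= 5:
        raise ValueError(f"Unexpected rating: {rating!r}")
    return rating


# Shortened stand-ins for the full reviews quoted above.
examples = [
    ("It's a great camera system!", "Five"),
    ("Not happy with these cameras.", "One"),
]
prompt = build_prompt(
    examples,
    "It's a decent camera system without a live view option for multiple cameras.",
)
print(prompt)
print(parse_rating('{"rating": 3}'))
```

Validating the parsed value matters here because nothing forces a generative model to honour the requested format, so the application should be ready for output that is not valid JSON or not a sensible rating.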

Acknowledgement

The texts in the examples are actual reviews taken from Amazon.

At the moment, it seems to me that creating applications using large language models (LLMs) remains an expensive endeavour due to the reliance on GPUs and ongoing supply chain issues. Still, there are promising signs of change, with growing interest in alternatives like Intel Arc and AMD Radeon. This diversification of hardware options offers hope for more accessible and affordable fine-tuning opportunities in the near future. Nevertheless, let us not forget to keep a close eye on the ecological impact of these advancements.

Oracle AI Vector Search

Unlike the GenAI service, not a lot has been published on this upcoming Oracle Database 23c feature. And I might stress "upcoming". When news first broke (also) at Oracle CloudWorld 2023, it wasn't clear if the vector data type and its powerful search features were part of the 23c general availability (GA) that was announced at the same time. If you missed it, here's the press release.

Embeddings and Knowledge Graphs

In the absence of publicly available content on the AI vector search database feature, let's focus instead on an important concept: embeddings. In the context of LLMs, an embedding is a vector of numbers that represents, to some degree, the meaning and context of a body of text. These vectors are derived from the parameters, or weights, of the LLM used to generate them. They can be used to encode and decode both the inputs and outputs of your inferencing task. These vectors are what power "semantic" search and Retrieval-Augmented Generation (RAG), and based on the press release, we can expect to perform these tasks when the AI vector search feature becomes generally available.
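To give a feel for how embeddings enable "semantic" search, here is a toy Python sketch that ranks a few documents by cosine similarity to a query vector. The three-dimensional vectors are made up for illustration; real embeddings come from a model and typically have hundreds or thousands of dimensions. Nothing here reflects how Oracle's AI vector search is implemented.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up vectors standing in for model-generated embeddings.
documents = {
    "camera review": [0.9, 0.1, 0.0],
    "database tuning": [0.0, 0.2, 0.9],
    "night vision footage": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]

# Rank documents from most to least similar to the query.
ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(query, documents[name]),
    reverse=True,
)
print(ranked)
```

In a RAG setup, the top-ranked documents would then be added to the prompt as context, so that the model answers from retrieved text and not from its training data alone.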

While I wait for AI vector search to become generally available, there are a few frameworks and alternative vector database solutions that I am currently looking at, including, most recently, the newly released OCI Cache with Redis service, which didn't work out as I had expected. 😦 More on that in a 2024 blog post.

One other area that I am looking to spend some time on in 2024 is knowledge graph embeddings. I first learned about the Semantic Web and the power of ontologies, knowledge representation, and linked data at a 2006 bioinformatics conference in Boston, where I crashed a special interest group meeting at the MIT Computer Science & Artificial Intelligence Laboratory. Aside from seeing the back of Sir Tim Berners-Lee's head, I was truly inspired by the brains around this subject, and I remember listening to a presentation on what would later become Freebase.

In my Kscope17 presentation, Enriching Oracle APEX Applications with Semantics, I described simple changes to how data is presented so that software agents may access and consume data meaningfully. As we continue to produce yottabytes of data, we should not neglect the importance of curating, documenting, and enriching (and securing) data. Data without meaning is going to be garbage. And embedding meaning in data is increasingly important in this new era of AI. I'm placing my bets that the development and use of knowledge graphs together with frontier models will be the next disrupter in the AI space. If anyone's looking for a part-time PhD candidate, can I please volunteer? 🙋😉

Summary

It's been an accelerating year for AI developments, and I don't think anyone's going to see the end of it in 2024. For me personally, I continue to be conflicted about the current developments and hype around generative AI. While it presents a lot of promise, it shouldn't dwarf the usefulness of traditional ML approaches, which I feel still provide a lot of immediate utility. It is also seemingly a technology for those who can afford it, and it has the potential to take jobs away from people who do what some might call mundane work.

Take the banner art that I used for my previous few blog posts, for example. In case it wasn't obvious enough, I have relied on generating the images using the Stable Diffusion XL model, whereas I used to scour online repositories of royalty-free photos. For more professional websites, I'm sure they source these from companies selling stock art from contributors, providing a source of income for people passionate about photography or graphic art.

AI is great, and I'm passionate about what it can do for civilisation. But in 2024, and in the years following, I do hope that we can all continue to remember that technology should be used to improve the lives of all, and not some.

With that, I'll end this blog post and 2023. For those whom I have had the privilege of working with this year, a huge "Thank you". It's been a great year, and I look forward to our continued collaboration to make this world a better place for you, me, and those whom we serve.

Happy New Year Everyone!

祝大家新年快乐!

Bonne Année à Tous!

あけましておめでとう皆さん!

Photo Credits

The photo used in the banner image was taken by me quite recently. This is a winter sunrise with the mountains in the backdrop. Moving from big cities into a remote community in the far North has been humbling, and a reminder that I am a very small piece in this humongous puzzle.