Speak My Language

It's been too long since I last posted anything on my blog. Thanks to Tim Hall, I am writing again, after a long pause, to celebrate Joel Kallman Day 2022. My contribution today is about my deep appreciation for the team behind the Oracle Cloud Infrastructure (OCI) AI Services. Below is a quick scoop on how I have used these services to improve my OCI-powered e-flashcard for learning new words in a different language. I will be giving a quick demonstration of this work (session LRN3673) at the upcoming Oracle CloudWorld 2022.

The E-Flashcard Solution

Here's a TL;DR of my initial article on the e-flashcard solution.

On my fridge, I have an M5Paper that pulls a random English word or phrase from an Oracle Autonomous Database. The word or phrase and its Japanese translation are entered using an Oracle Application Express (APEX) application and exposed as a REST service using Oracle REST Data Services (ORDS). I performed the translation manually, using a tool like Google Translate.

Then Dimitri Gielis made this suggestion:

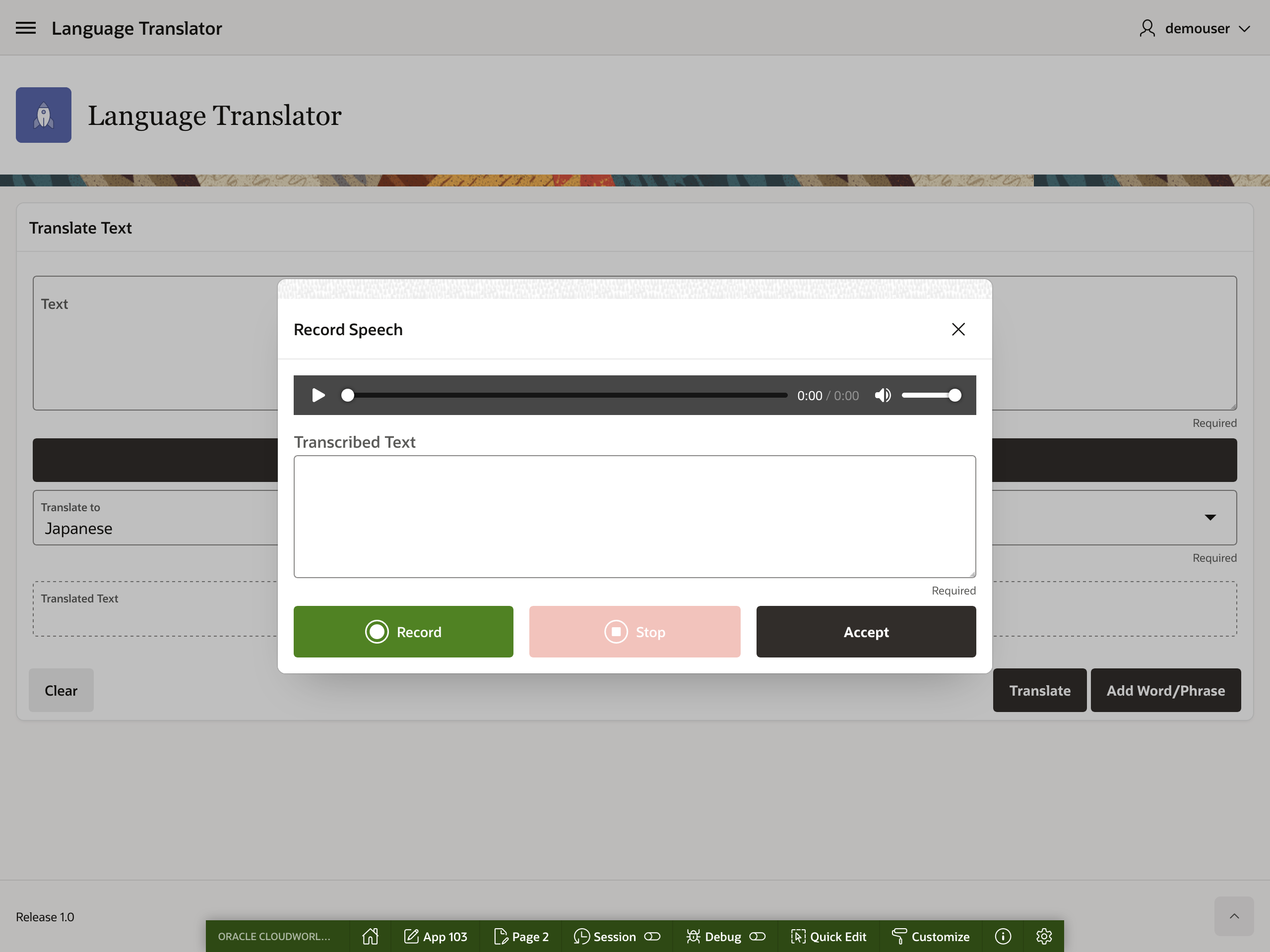

The second version of my implementation isn't quite driven by Amazon Alexa yet, but at least in the web interface, I have added a voice input feature that lets me speak a word and translate it into a language of my choosing.

Voice => APEX => Speech Service => Language Service => DB => ORDS => M5Paper

Speech Service

Released in early 2022, the Speech Service is one of several OCI AI Services. It uses Automatic Speech Recognition (ASR) technology to transcribe audio content to text. Initially, the service was very limited in the audio formats it accepted: the audio had to be single-channel, sampled at 16,000 Hz, encoded in PCM, and saved in the WAV format.
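To make those early constraints concrete, here is a minimal sketch (not part of the original solution) that checks whether a clip is a mono, 16 kHz PCM WAV file using only Python's standard `wave` module. The helper names `meets_early_speech_requirements` and `make_silent_wav` are hypothetical, and the 16-bit sample width in the test clip is an assumption; the original requirements only say "PCM".

```python
import io
import wave

# Early Speech Service input requirements, as described above.
REQUIRED_CHANNELS = 1
REQUIRED_RATE_HZ = 16_000


def meets_early_speech_requirements(wav_bytes: bytes) -> bool:
    """Return True if wav_bytes is a single-channel, 16 kHz PCM WAV file.

    The wave module only parses uncompressed PCM WAV data and raises
    wave.Error for other encodings, so a successful open covers the PCM check.
    """
    try:
        with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
            return (wav.getnchannels() == REQUIRED_CHANNELS
                    and wav.getframerate() == REQUIRED_RATE_HZ)
    except (wave.Error, EOFError):
        # Not a WAV file at all, or not PCM-encoded.
        return False


def make_silent_wav(channels: int, rate_hz: int, seconds: float = 0.1) -> bytes:
    """Build a short, silent PCM WAV clip in memory for testing (hypothetical helper)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)  # 16-bit samples: an assumption for this test clip
        wav.setframerate(rate_hz)
        n_frames = int(rate_hz * seconds)
        wav.writeframes(b"\x00\x00" * channels * n_frames)
    return buf.getvalue()


print(meets_early_speech_requirements(make_silent_wav(1, 16_000)))  # True
print(meets_early_speech_requirements(make_silent_wav(2, 44_100)))  # False
```

A typical browser recording (for example, stereo at 44.1 or 48 kHz in a compressed codec) fails this check, which is why a conversion step was needed.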

These strict requirements made it difficult to build a purely web-based interface. One would have to capture the audio using the MediaRecorder API and any of its supported codecs, transcode it with FFmpeg running in Oracle Cloud Functions, and then submit a Speech transcription job.
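The transcoding step in that workaround boils down to a single FFmpeg invocation. The sketch below only builds the argument list (so it runs without FFmpeg installed); the function name `ffmpeg_to_speech_wav` and the file names are hypothetical, and 16-bit little-endian PCM (`pcm_s16le`) is an assumed choice of PCM flavour. The flags themselves are standard FFmpeg options.

```python
import shutil
import subprocess


def ffmpeg_to_speech_wav(src: str, dst: str) -> list[str]:
    """Build an FFmpeg command converting any input to mono, 16 kHz PCM WAV."""
    return [
        "ffmpeg",
        "-y",               # overwrite dst if it already exists
        "-i", src,          # input, e.g. a WebM/Opus clip from MediaRecorder
        "-ac", "1",         # single audio channel
        "-ar", "16000",     # 16,000 Hz sample rate
        "-c:a", "pcm_s16le",  # uncompressed 16-bit PCM (assumed sample format)
        "-f", "wav",        # force the WAV container
        dst,
    ]


cmd = ffmpeg_to_speech_wav("recording.webm", "recording.wav")
print(" ".join(cmd))

# Only attempt the conversion if FFmpeg is available on this machine.
if shutil.which("ffmpeg"):
    try:
        subprocess.run(cmd, check=True)
    except subprocess.CalledProcessError as err:
        print(f"FFmpeg failed (is recording.webm present?): {err}")
```

In the workaround described above, this command would run inside an Oracle Cloud Function, which adds a deployment and an extra hop for every recording.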

I then looked at other solutions that used WebAssembly to perform the audio format conversion entirely in the browser, such as Opus MediaRecorder and FFMPEG.WASM. Unfortunately, I ran out of time, got busy, and had to put this project on hold.

Thankfully, months later, a newer version of the service was released. Apart from supporting new languages, it also allowed users to submit audio files in other formats, several of which are supported by the MediaRecorder API. Hurrah!

I kept most of the initial prototype and updated the Ajax Callback.

To keep this post readable, I won't list the source code of the PL/SQL procedure I wrote, but here's the pseudocode:

- Upload the recorded audio as a BLOB and store it in OCI Object Storage.

- Create a transcription job.

- If successful, query the transcription job using the job's OCID.

- Loop until the job state is either SUCCEEDED, FAILED, or CANCELED.

- If the state is SUCCEEDED, then get the transcription job's task and get the location of the results stored as an object in the Object Storage.

- Retrieve the transcription results stored as JSON.
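The polling and result-parsing steps of that pseudocode can be sketched in Python. This is not the author's PL/SQL procedure: `get_state` is a hypothetical callable that, in a real implementation, would call the Speech service's GetTranscriptionJob operation and return the job's lifecycle state. The layout of the results JSON (a top-level `transcriptions` array whose items carry a `transcription` field) is an assumption to verify against an actual output object.

```python
import json
import time
from typing import Callable

# A job in any of these states will not change again.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELED"}


def wait_for_job(get_state: Callable[[], str],
                 poll_seconds: float = 2.0,
                 timeout_seconds: float = 300.0,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_state() until the job reaches a terminal state and return it."""
    waited = 0.0
    while True:
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        if waited >= timeout_seconds:
            raise TimeoutError(f"job still {state} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


def extract_transcript(results_json: str) -> str:
    """Join the transcription strings in a results document (assumed layout)."""
    doc = json.loads(results_json)
    return " ".join(t["transcription"] for t in doc.get("transcriptions", []))


# Simulated job: two non-terminal states, then success (hypothetical sequence).
states = iter(["ACCEPTED", "IN_PROGRESS", "SUCCEEDED"])
print(wait_for_job(lambda: next(states), sleep=lambda s: None))  # SUCCEEDED

# Hypothetical results object, for illustration only.
sample = '{"transcriptions": [{"transcription": "Thank you very much."}]}'
print(extract_transcript(sample))  # Thank you very much.
```

Injecting `get_state` and `sleep` keeps the loop independent of any SDK and makes it easy to test; the timeout guards against a job that never leaves an in-progress state.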

Language Service "V2"

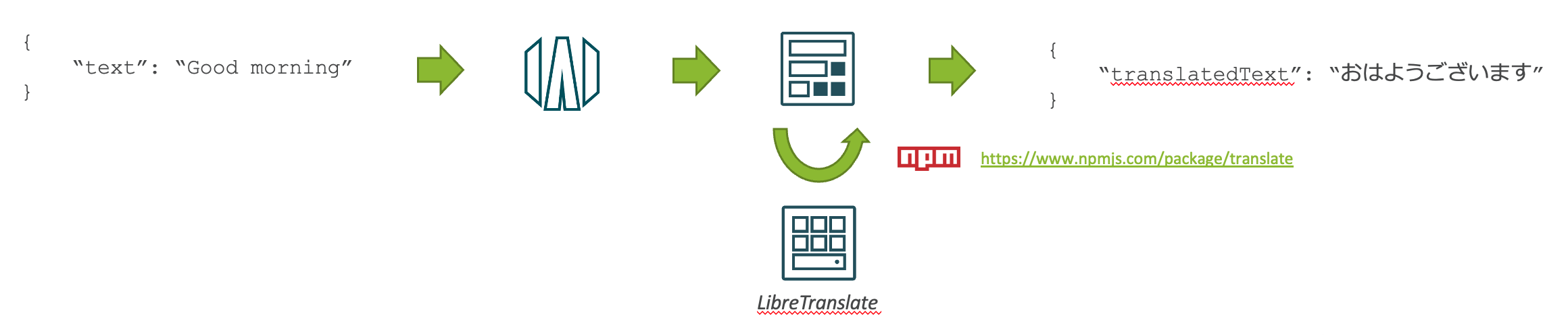

In Chapter 4 of the book "Extending Oracle Application Express with Oracle Cloud Features", I describe how you can host and use a language translation service using a few OCI resources and an open-source engine like LibreTranslate. Before I got limited access to the next generation of the Language Service, my solution looked like this:

Since then, I have abandoned all of that for a simpler solution that uses the Language Service's pre-trained models for text translation. As availability is limited, so is the documentation. Fortunately, the service also comes with a user interface in the OCI Console for trying it out. With that, I was able to figure out what I needed to call the service from the APEX application through REST. Believe me, it's not difficult.
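As a rough illustration of such a REST call, the sketch below builds a request body and parses a response. The field names (`documents`, `key`, `text`, `languageCode`, `targetLanguageCode`, `compartmentId`, `translatedText`) follow my understanding of the Language API's BatchLanguageTranslation operation as publicly documented today; the limited-availability version used here may have differed, so treat every field name as an assumption. The compartment OCID and the sample response are placeholders. In APEX, the request would also need to be signed, for example through an OCI-type Web Credential.

```python
import json


def build_translation_request(words: list[str], source_lang: str,
                              target_lang: str, compartment_id: str) -> dict:
    """Build a batch translation request body (field names are assumptions)."""
    return {
        "compartmentId": compartment_id,
        "targetLanguageCode": target_lang,
        "documents": [
            # Each document needs a unique key so results can be matched back.
            {"key": str(i), "text": word, "languageCode": source_lang}
            for i, word in enumerate(words, start=1)
        ],
    }


def parse_translations(response_json: str) -> dict[str, str]:
    """Map each document key to its translated text (assumed response layout)."""
    doc = json.loads(response_json)
    return {d["key"]: d["translatedText"] for d in doc.get("documents", [])}


body = build_translation_request(["thank you"], "en", "ja",
                                 "ocid1.compartment.oc1..example")  # placeholder OCID
print(json.dumps(body, ensure_ascii=False))

# Hypothetical response, for illustration only.
sample = '{"documents": [{"key": "1", "translatedText": "ありがとう"}]}'
print(parse_translations(sample)["1"])
```

Keying each document lets several words be translated in one request while still mapping each result back to its source row in the database.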

To the amazing people behind these products: thank you very much.

これらの製品の背後にある素晴らしい人々へ。本当にありがとう。(Transcribed and translated by OCI)

Photo Credits