Use Package DBMS_CLOUD_REPO with GitHub

The Oracle Autonomous Database (ADB) includes a package named DBMS_CLOUD_REPO for developers, release managers, and database administrators to work with code repositories such as GitHub, AWS Code Commit, and Azure Repos.

Using this package, you may perform actions such as:

- Listing and managing repositories.

- List, commit to, and manage code on the repository.

- Export database schemas and objects directly to the repository.

- Checkout and execute SQL statements from files committed to the repository.

In this Tech TnT article, we will configure an ADB to interact with a private GitHub repository to:

- Backup by exporting table, view, and package definitions from the database.

- Create a table using a SQL script from the repository.

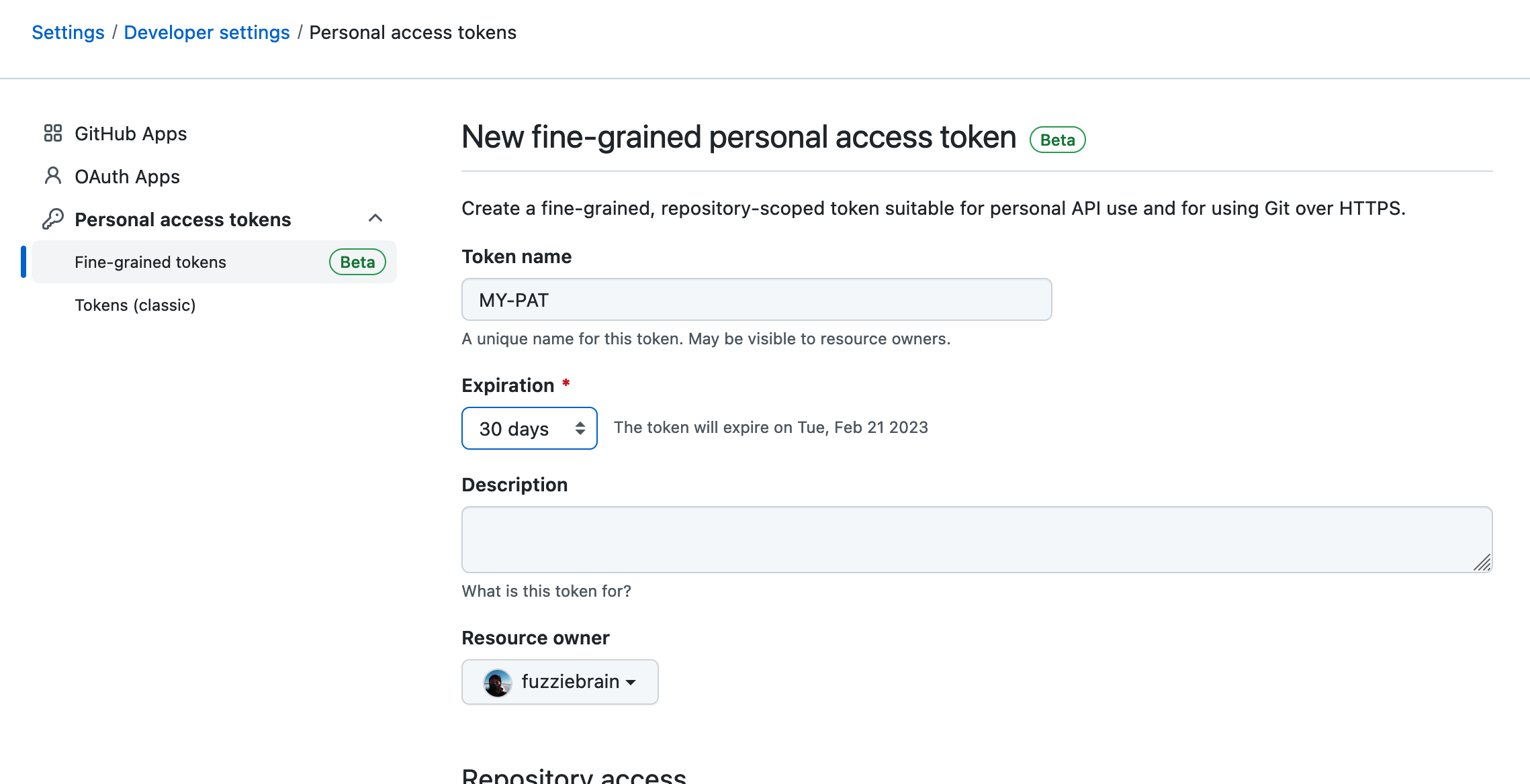

Task 1 - Create a GitHub Personal Access Token (PAT)

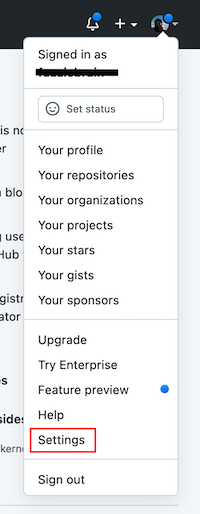

- Login to GitHub account.

- Click your user profile image on the top-right to reveal the dropdown menu, and then click Settings.

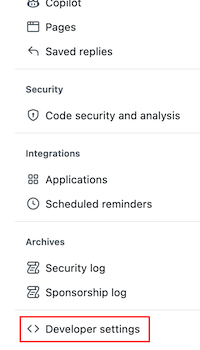

- On the next page, scroll down, and then click Developer Settings at the bottom of menu on the left-side of the page.

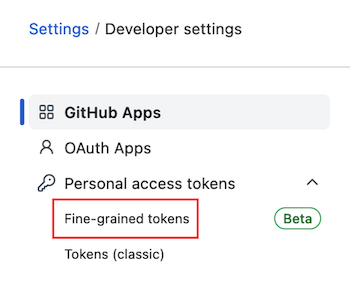

- On the developer settings page, expand the dropdown Personal access tokens, and then click Fine-grained tokens.

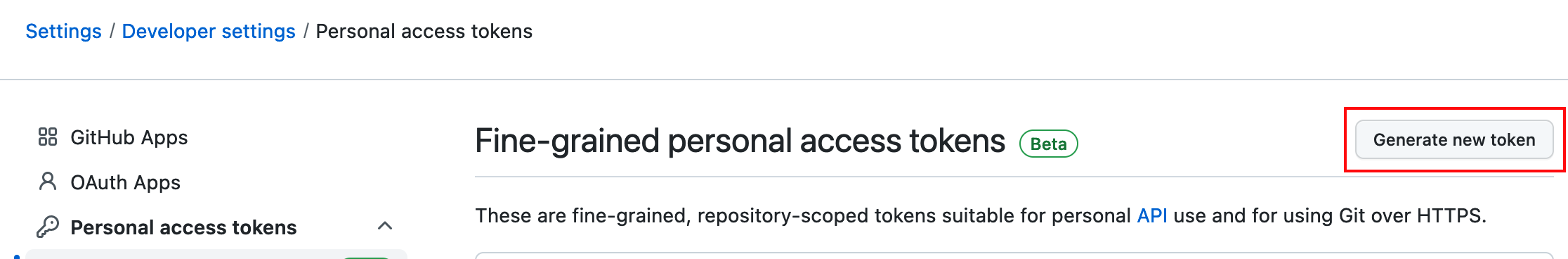

- Click the button Generate new token on the top-right.

- Provide a name and expiry date. Include a description if possible.

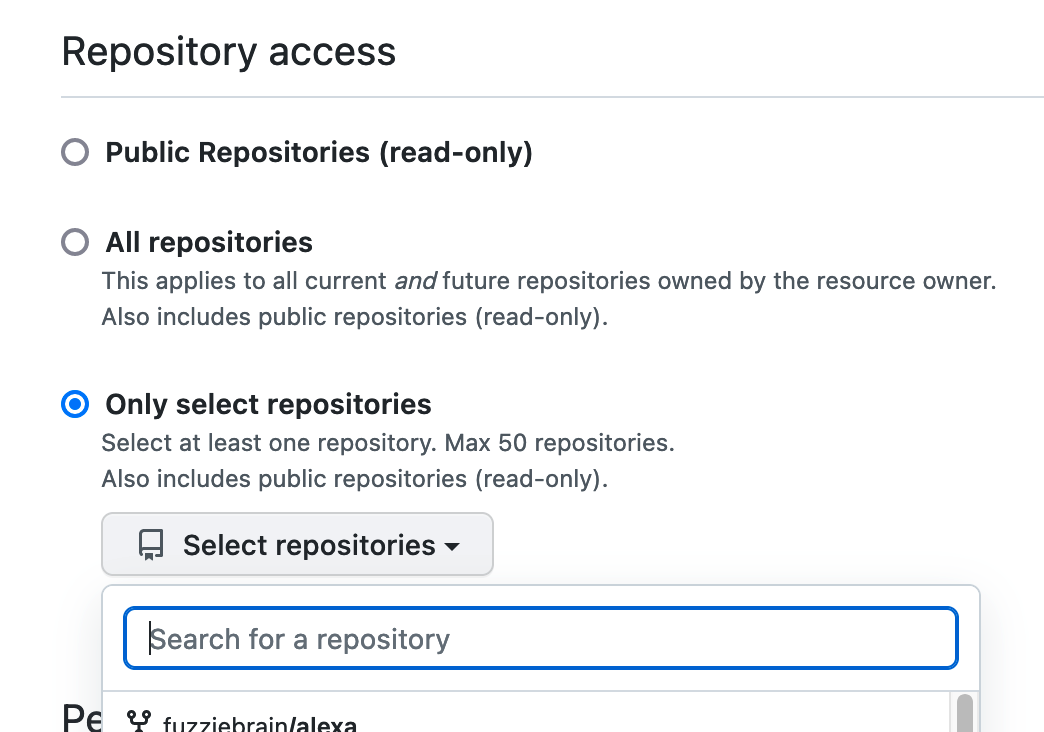

- Optionally, restrict the repositories that the API has access to using this PAT.

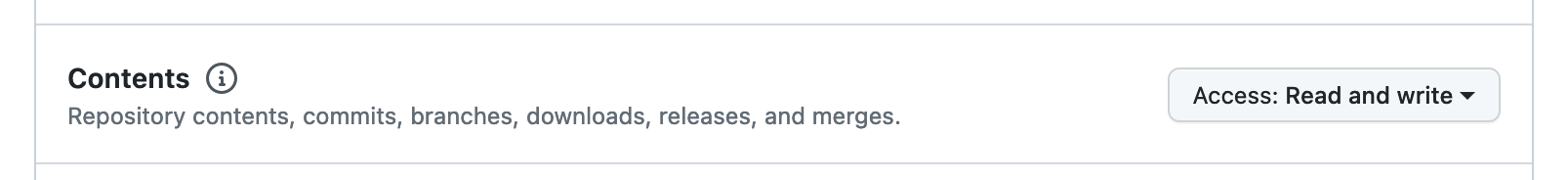

- Allow the contents of the repository to be read and written through the GitHub API using this PAT.

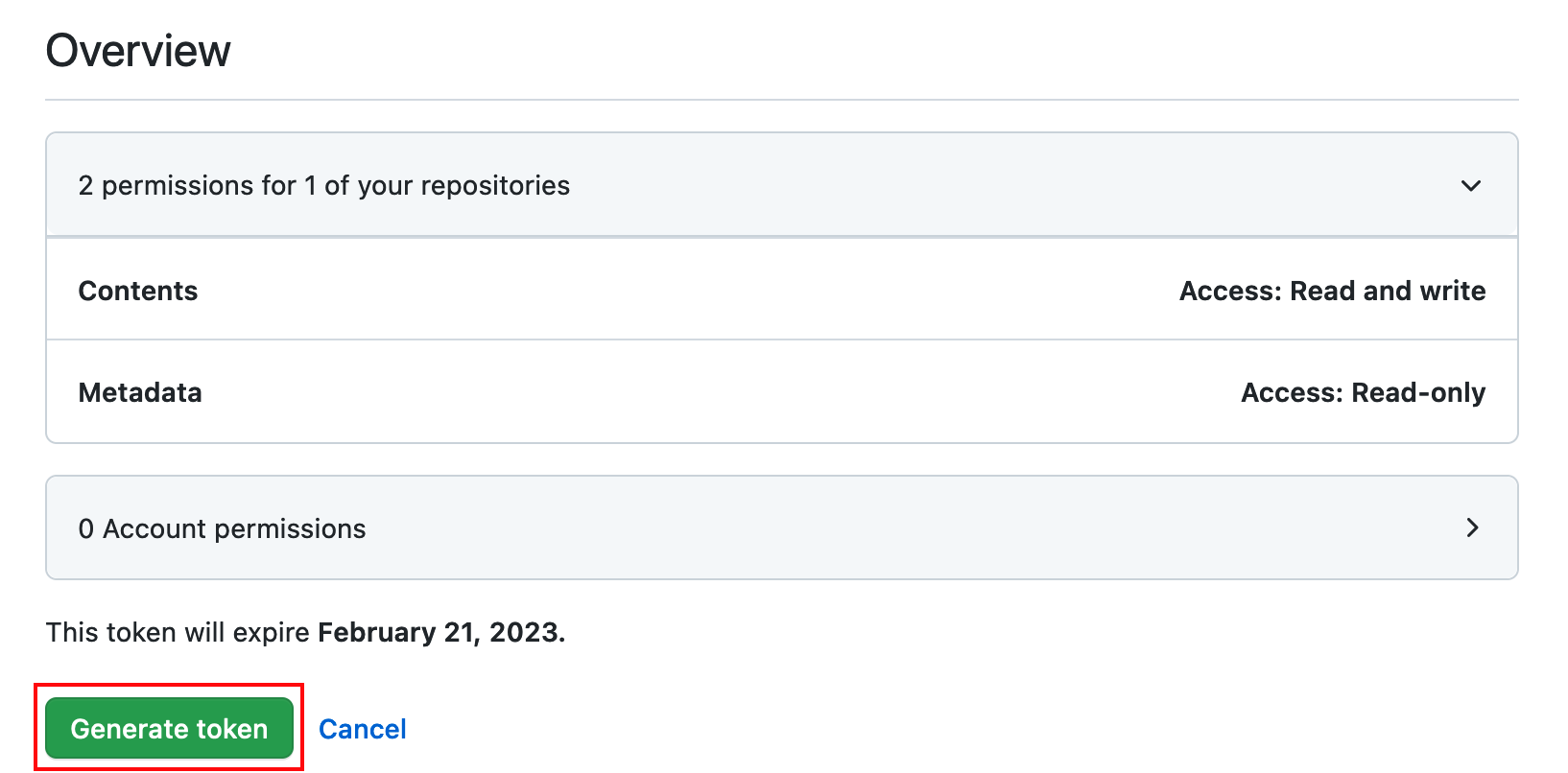

- Review the assigned permissions and then click the button Create token.

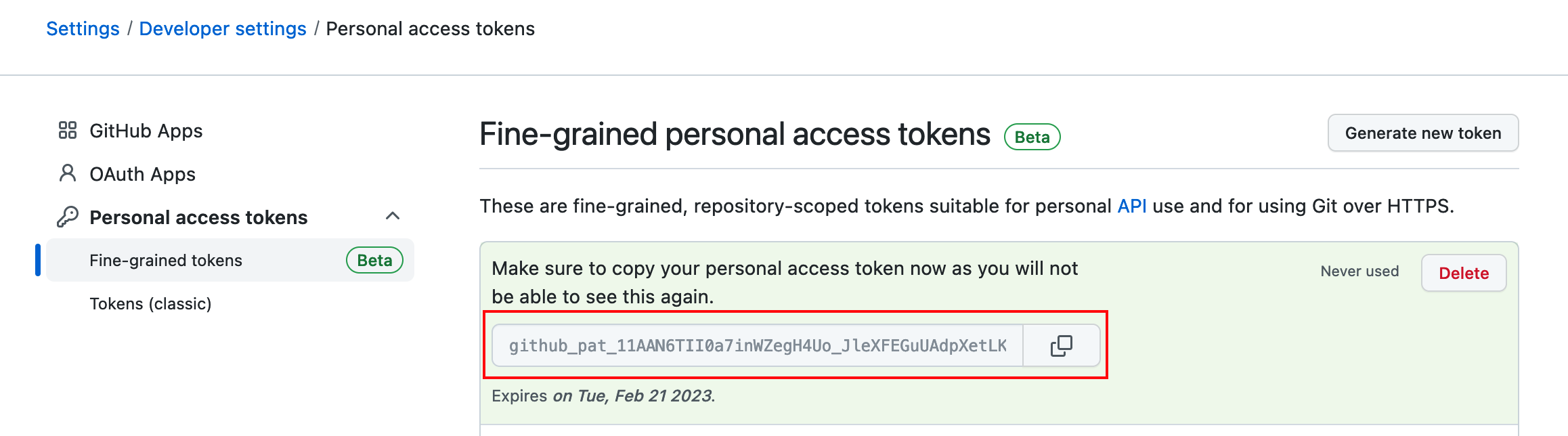

- the PAT is displayed only once after it is created. Copy the value and store it in a safe place.

Task 2 - Store PAT in a Cloud Service Credential

The GitHub personal access token (PAT) generated in the previous task should be stored as a cloud service credential in the ADB. This is performed using the DBMS_CLOUD.CREATE_CREDENTIAL procedure.

- List existing credentials in the ADB:

select *

from user_credentials;

- Create a credential for GitHub using the PAT.

begin

dbms_cloud.create_credential(

credential_name => 'GITHUB_CRED'

, username => 'mygithub@email.com'

, password => 'github_pat_...'

);

end;

/

Task 3 - Check Access to Repository

Next, validate the cloud service credential created in the previous task by executing the following code:

column name format a50;

var repo_handle clob;

begin

:repo_handle := dbms_cloud_repo.init_github_repo(

credential_name => 'GITHUB_CRED'

, repo_name => 'myrepo'

, owner => 'mygithubaccount'

);

end;

/

select name from dbms_cloud_repo.list_repositories(:repo_handle);

If the credential setup was successful, a list of repositories that the PAT has access will be displayed.

The above code snippet can be executed in SQLcl, SQL*Plus, or SQL Developer. However, when executing it in SQL Developer, execute the entire snippet as a script.

Task 4 - Export Schema Objects to Repository

With the credential setup completed and validated, you may now use the DBMS_CLOUD_REPO subprograms to export database objects to the GitHub repository. Execute the PL/SQL code below to iterate through the schema's tables, views, and the specifications and body of all packages, using the DBMS_CLOUD_REPO.EXPORT_OBJECT procedure. The DDL of each object type will upload the code in an appropriate subdirectory name. Amend the commit message, author, and email as required.

declare

l_repo_handle clob;

begin

l_repo_handle := dbms_cloud_repo.init_github_repo(

credential_name => 'GITHUB_CRED'

, owner => 'mygithubaccount'

, repo_name => 'myrepo'

);

for obj in (

select *

from user_objects

where object_type in ('TABLE', 'VIEW', 'PACKAGE', 'PACKAGE BODY')

) loop

dbms_cloud_repo.export_object(

repo => l_repo_handle

, file_path =>

case obj.object_type

when 'TABLE'

then 'models/' || lower(obj.object_name) || '.sql'

when 'VIEW'

then 'views/' || lower(obj.object_name) || '.sql'

when 'PACKAGE'

then 'packages/' || lower(obj.object_name) || '.pks'

when 'PACKAGE BODY'

then 'packages/' || lower(obj.object_name) || '.pkb'

end

, object_type =>

case obj.object_type

when 'TABLE' then 'TABLE'

when 'VIEW' then 'VIEW'

when 'PACKAGE' then 'PACKAGE_SPEC'

when 'PACKAGE BODY' then 'PACKAGE_BODY'

end

, object_name => obj.object_name

, object_schema => user

, branch_name => 'main'

, commit_details => json_object(

'message' value 'DBMS_CLOUD_REPO commit'

, 'author' value 'Name Used for Commits'

, 'email' value 'my.commit.address@email.com'

)

, append => false

);

end loop;

end;

/

Alternatively, use the DBMS_METADATA.GET_DDL procedure for fine-grain control of the data definition language (DDL) code generated, and then commit the code using the DBMS_CLOUD_REPO.PUT_FILE procedure.

Task 5 - Run SQL Code from Repository

In the final example, the procedure will checkout the file specified in the DBMS_CLOUD_REPO.INSTALL_FILE procedure's file_path parameter and execute in the database.

declare

l_repo_handle clob;

begin

l_repo_handle := dbms_cloud_repo.init_github_repo(

credential_name => 'GITHUB_CRED'

, owner => 'mygithubaccount'

, repo_name => 'myrepo'

);

dbms_cloud_repo.install_file(

repo => l_repo_handle

, file_path => 'models/mytable.sql',

stop_on_error => true

);

end;

/

If the file models/mytable.sql creates a table named MYTABLE, validate that it was successfully created in the database.